Coping with the Limitations of Algorithm Power:Branch and Bound Searching Strategies.

Branch and Bound Searching Strategies

Feasible Solution vs. Optimal Solution

• DFS, BFS, hill climbing and best-first search can be used to solve some searching problem for searching a feasible solution.

• However, they cannot be used to solve the optimization problems for searching an (the) optimal solution.

The Branch-and-bound strategy

• This strategy can be used to solve optimization problems without an exhaustive search in the average case.

• 2 mechanisms:

– A mechanism to generate branches when searching the solution space

– A mechanism to generate a bound so that many braches can be terminated

• It is efficient in the average case because many branches can be terminated very early.

• Although it is usually very efficient, a very large tree may be generated in the worst case.

• Many NP-hard problem can be solved by B&B efficiently in the average case; however, the worst case time complexity is still exponential.

Bounding

• A bound on a node is a guarantee that any solution obtained from expanding the node

will be:

– Greater than some number (lower bound)

– Or less than some number (upper bound)

• If we are looking for a minimal optimal, as we are in weighted graph coloring, then we need a lower bound

– For example, if the best solution we have found so far has a cost of 12 and the lower bound on a node is 15 then there is no point in expanding the node

• The node cannot lead to anything better than a 15

• We can compute a lower bound for weighted graph color in the following way:

– The actual cost of getting to the node

– Plus a bound on the future cost

• Min weight color * number of nodes still to color

– That is, the future cost cannot be any better than this

• Recall that we could either perform a depth-first or a breadth-first search

– Without bounding, it didn’t matter which one we used because we had to expand the entire tree to find the optimal solution

– Does it matter with bounding?

• Hint: think about when you can prune via bounding

• We prune (via bounding) when: (currentBestSolutionCost <= nodeBound)

• This tells us that we get more pruning if:

– The currentBestSolution is low

– And the nodeBound is high

• So we want to find a low solution quickly and we want the highest possible lower bound

– One has to factor in the extra computation cost of computing higher lower bounds vs. the expected pruning savings

The Assignment Problem

• In many business situations, management needs to assign - personnel to jobs, - jobs to

machines, - machines to job locations, or - salespersons to territories.

• Consider the situation of assigning n jobs to n machines.

• When a job i (=1,2,....,n) is assigned to machine j (=1,2, .....n) that incurs a cost Cij.

• The objective is to assign the jobs to machines at the least possible total cost.

• This situation is a special case of the Transportation Model And it is known as the assignment problem.

• Here, jobs represent “sources” and machines represent “destinations.”

• The supply available at each source is 1 unit And demand at each destination is 1 unit.

The Assignment Problem Example

• Ballston Electronics manufactures small electrical devices.

• Products are manufactured on five different assembly lines (1,2,3,4,5).

• When manufacturing is finished, products are transported from the assembly lines to one of the five different inspection areas (A,B,C,D,E).

• Transporting products from five assembly lines to five inspection areas requires different times (in minutes)

Under current arrangement, assignment of inspection areas to the assembly lines are 1 to A, 2 to B, 3 to C, 4 to D, and 5 to E. This arrangement requires 10+7+12+17+19 = 65 man minutes.

• Management would like to determine whether some other assignment of production lines to inspection areas may result in less cost.

• This is a typical assignment problem. n = 5 And each assembly line is assigned to each inspection area.

• It would be easy to solve such a problem when n is 5, but when n is large all possible alternative solutions are n!, this becomes a hard problem.

• Assignment problem can be either formulated as a linear programming model, or it can be formulated as a transportation model.

• However, An algorithm known as Hungarian Method has proven to be a quick and efficient way to solve such problems.

Assignment Problem Revisited:

Select one element in each row of the cost matrix C so that:

• no two selected elements are in the same column

• the sum is minimized

Example

Lower bound: Any solution to this problem will have total cost at least: 2 + 3 + 1 + 4 (or 5 + 2 + 1 + 4)

Example: Complete state-space tree

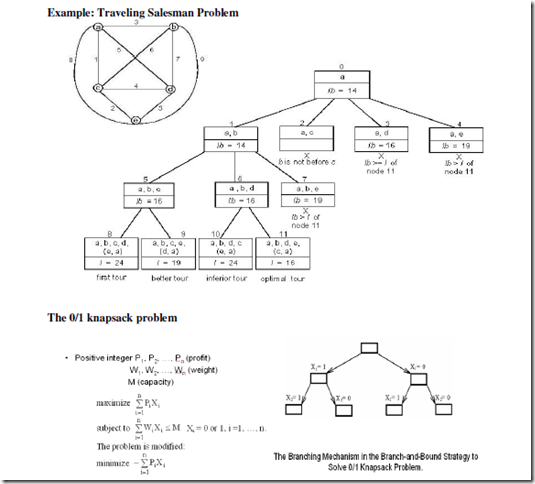

Traveling Salesperson Problem

• This is a classic CS problem

• Given a graph (cities), and weights on the edges (distances) find a minimum weight tour of the cities

– Start in a particular city

– Visit all other cities (exactly once each)

– Return to the starting city

• Cannot be done by brute-force as this is worst-case exponential or worse running time

– So we will look to backtracking with pruning to make it run in a reasonable amount of time in most cases

• We will build our state space by:

– Having our children be all the potential cities we can go to next

– Having the depth of the tree be equal to the number of cities in the graph

• we need to visit each city exactly once

• So given a fully connected set of 5 nodes we have the following state space

– only partially completed

• Now we need to add bounding to this problem

– It is a minimization problem so we need to find a lower bound

• We can use:

– The current cost of getting to the node plus

– An underestimate of the future cost of going through the rest of the cities

• The obvious choice is to find the minimum weight edge in the graph and multiply that edge weight by the number of remaining nodes to travel through

• As an example assume we have the given adjacency matrix

• If we started at node A and have just traveled to node B then we need to compute the bound for node B

– Cost 14 to get from A to B

– Minimum weight in matrix is 2 times 4 more legs to go to get back to node A = 8

– For a grand total of 14 + 8 = 22

How to find the upper bound?

• Ans: by quickly finding a feasible solution in a greedy manner: starting from the smallest

available i, scanning towards the largest i’s until M is exceeded. The upper bound can be calculated.

The 0/1 knapsack problem

• E.g. n = 6, M = 34

• A feasible solution: X1 = 1, X2 = 1, X3 = 0, X4 = 0, X5 = 0, X6 = 0 -(P1+P2) = -16 (upper bound) Any solution higher than -16 can not be an optimal solution.

How to find the lower bound?

• Ans: by relaxing our restriction from Xi = 0 or 1 to 0 ≤ Xi ≤ 1 (knapsack problem)

Approximation Approach

Apply a fast (i.e., a polynomial-time) approximation algorithm to get a solution that is not necessarily optimal but hopefully close to it Accuracy measures:

accuracy ratio of an approximate solution

sa r(sa) = f(sa) / f(s*) for minimization problems

r(sa) = f(s*) / f(sa) for maximization problems

where f(sa) and f(s*) are values of the objective function f for the approximate solution sa and actual optimal solution s*

performance ratio of the algorithm A the lowest upper bound of r(sa) on all instances

Nearest-Neighbor Algorithm for TSP

Starting at some city, always go to the nearest unvisited city, and, after visiting all the cities, return to the starting one

Accuracy: RA = ∞ (unbounded above) – make the length of AD arbitrarily large in the above example

Multifragment-Heuristic Algorithm

Stage 1: Sort the edges in nondecreasing order of weights. Initialize the set of tour edges to be constructed to empty set

Stage 2: Add next edge on the sorted list to the tour, skipping those whose addition would’ve created a vertex of degree 3 or a cycle of length less than n. Repeat this step until a tour of length n is obtained

Note: RA = ∞, but this algorithm tends to produce better tours than the nearest-neighbor algorithm

Twice-Around-the-Tree Algorithm

Stage 1: Construct a minimum spanning tree of the graph(e.g., by Prim’s or Kruskal’s algorithm)

Stage 2: Starting at an arbitrary vertex, create a path that goes twice around the tree and returns to the same vertex

Stage 3: Create a tour from the circuit constructed in Stage 2 by making shortcuts to avoid visiting intermediate vertices more than once

Note: RA = ∞ for general instances, but this algorithm tends to produce better tours than the nearest-neighbor algorithm

Christofides Algorithm

Stage 1: Construct a minimum spanning tree of the graph

Stage 2: Add edges of a minimum-weight matching of all the odd vertices in the minimum spanning tree

Stage 3: Find an Eulerian circuit of the multigraph obtained in Stage 2

Stage 3: Create a tour from the path constructed in Stage 2 by making shortcuts to avoid visiting intermediate vertices more than once

RA = ∞ for general instances, but it tends to produce better tours than the twice-around-the- minimum-tree alg.

Euclidean Instances

Theorem If P ≠ NP, there exists no approximation algorithm for TSP with a finite performance ratio.

Definition An instance of TSP is called Euclidean, if its distances satisfy two conditions:

1. symmetry d[i, j] = d[j, i] for any pair of cities i and j

2. triangle inequality d[i, j] ≤ d[i, k] + d[k, j] for any cities i, j, k

For Euclidean instances:

approx. tour length / optimal tour length ≤ 0.5(rlog2 nl + 1) for nearest neighbor and multifragment heuristic; approx. tour length / optimal tour length ≤ 2 for twice-around-the-tree; approx. tour length / optimal tour length ≤ 1.5 for Christofides

Local Search Heuristics for TSP

Start with some initial tour (e.g., nearest neighbor). On each iteration, explore the current tour’s neighborhood by exchanging a few edges in it. If the new tour is shorter, make it the current tour; otherwise consider another edge change. If no change yields a shorter tour, the current tour is returned as the output.

Greedy Algorithm for Knapsack Problem

Step 1: Order the items in decreasing order of relative values:

v1/w1≥… ≥ vn/wn

Step 2: Select the items in this order skipping those that don’t fit into the knapsack

Accuracy

• RA is unbounded (e.g., n = 2, C = m, w1=1, v1=2, w2=m, v2=m)

• yields exact solutions for the continuous version

Approximation Scheme for Knapsack Problem

Step 1: Order the items in decreasing order of relative values:

v1/w1≥… ≥ vn/wn

Step 2: For a given integer parameter k, 0 ≤ k ≤ n, generate all subsets of k items or less and for each of those that fit the knapsack, add the remaining items in decreasing order of their value to weight ratios

Step 3: Find the most valuable subset among the subsets generated in Step 2 and return it as the algorithm’s output

Bin Packing Problem: First-Fit Algorithm

First-Fit (FF) Algorithm: Consider the items in the order given and place each item in the first available bin with enough room for it; if there are no such bins, start a new one Example: n = 4, s1 = 0.4, s2 = 0.2, s3 = 0.6, s4 = 0.7

Accuracy

• Number of extra bins never exceeds optimal by more than 70% (i.e., RA ≤ 1.7)

• Empirical average-case behavior is much better. (In one experiment with 128,000 bins, the relative error was found to be no more than 2%.)

Bin Packing: First-Fit Decreasing Algorithm

First-Fit Decreasing (FFD) Algorithm: Sort the items in decreasing order (i.e., from the largest to the smallest). Then proceed as above by placing an item in the first bin in which it fits and starting a new bin if there are no such bins

Example: n = 4, s1 = 0.4, s2 = 0.2, s3 = 0.6, s4 = 0.7

Accuracy

• Number of extra bins never exceeds optimal by more than 50% (i.e., RA ≤ 1.5)

• Empirical average-case behavior is much better, too

Comments

Post a Comment