R-trees: Improving Performance

Improving Performance

Since R-trees were first proposed in [23], many variants and methods to improve the structure and performance of the tree have been proposed. We discuss a few of the more common ones: R∗-trees [3], Hilbert R-trees [29] and several bulk loading algorithms [28, 36, 51]. Other proposals for improving performance include [15, 20, 56, 57].

Given that the known R-tree insertion algorithms are based on heuristic optimization, it is reasonable to assess their merit experimentally. Beckmann et al. [3] conducted an extensive experimental study to explore the impact of alternative approaches to leaf selection and node splitting. Based on their experiments, they proposed the R* tree, which has become the most commonly implemented R-tree variant.

The R* tree differs from the original Guttman R-tree in three ways.

First, the leaf where a new object is inserted is chosen differently. The path selection algorithm makes use of the concept of overlap of an entry $E_i$ in a node $v$, defined as $\mathrm{overlap}(E_i) = \sum_{j=1,\, j \neq i}^{m} \mathrm{area}(r_i \cap r_j)$, where m is the number of entries in $v$ and $r_i$ is the rectangle associated with $E_i$. When descending from the root, if the next node to be selected is a leaf, the algorithm chooses the entry that requires the least increase in overlap, resolving ties by least area enlargement. If the next node is not a leaf, the entry with the least area enlargement is chosen.
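The selection rule can be sketched in Python as follows. This is a minimal 2-D sketch, not the code of [3]: rectangles are assumed to be tuples `(xlo, ylo, xhi, yhi)`, and the helper names (`choose_entry`, `children_are_leaves`, and so on) are hypothetical. The overlap increase of an entry is taken as its overlap after enlargement minus its overlap before.

```python
from typing import List, Tuple

# Hypothetical 2-D rectangle representation: (xlo, ylo, xhi, yhi).
Rect = Tuple[float, float, float, float]


def area(r: Rect) -> float:
    return (r[2] - r[0]) * (r[3] - r[1])


def union(a: Rect, b: Rect) -> Rect:
    """Smallest rectangle enclosing both a and b."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


def intersection_area(a: Rect, b: Rect) -> float:
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return w * h if w > 0 and h > 0 else 0.0


def overlap(rects: List[Rect], i: int, ri: Rect) -> float:
    """Sum of area(ri ∩ rj) over the other entries j != i of the node."""
    return sum(intersection_area(ri, rj) for j, rj in enumerate(rects) if j != i)


def choose_entry(rects: List[Rect], new: Rect, children_are_leaves: bool) -> int:
    """Index of the entry to descend into when inserting `new`."""
    def enlargement(i: int) -> float:
        return area(union(rects[i], new)) - area(rects[i])

    if children_are_leaves:
        def overlap_increase(i: int) -> float:
            return overlap(rects, i, union(rects[i], new)) - overlap(rects, i, rects[i])
        # Least overlap increase; ties resolved by least area enlargement.
        return min(range(len(rects)), key=lambda i: (overlap_increase(i), enlargement(i)))
    # Internal levels: least area enlargement only.
    return min(range(len(rects)), key=enlargement)
```

For example, with entries `A = (0, 4, 2.9, 6)` and `D = (3, 0, 3.2, 10)` and a new rectangle `(3.5, 5, 3.6, 5.1)`, enlarging A costs less area (index 0 is chosen at internal levels), but the enlarged A would overlap D, so index 1 is chosen when the children are leaves.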

The second difference is the use of forced reinserts. The authors discovered that the initial order of inserts significantly impacts tree quality. They also observed that query performance of an existing R-tree can be improved by removing half of the entries and then re-inserting them. Of course, the authors do not recommend performing a restructuring of this magnitude frequently. Rather, they used this insight to modify the policy for dealing with overflowed nodes. If an insertion causes an overflow, calculate the distance from the center of each of the B + 1 entries to the center of the MBR enclosing all B + 1 entries. Then sort the entries in decreasing order of this distance. Remove the p furthest entries, where p is set to 30% of B, and re-insert the p removed entries into the tree. Some subset of the p re-inserts may be inserted into nodes other than the initial node that overflowed. For each of the p re-inserts, if they do not cause an overflow, do nothing; otherwise, split the node using the algorithm below.
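Selecting the entries to remove on overflow can be sketched as below. This is a minimal Python sketch assuming 2-D rectangles as `(xlo, ylo, xhi, yhi)` and Euclidean distance between centers; rounding 0.3 · B to the nearest integer (and never below 1) is our assumption, and the function name is hypothetical. Re-inserting the removed entries would reuse the ordinary insertion path and is not shown.

```python
import math
from typing import List, Tuple

Rect = Tuple[float, float, float, float]  # hypothetical (xlo, ylo, xhi, yhi)


def center(r: Rect) -> Tuple[float, float]:
    return ((r[0] + r[2]) / 2, (r[1] + r[3]) / 2)


def mbr(rects: List[Rect]) -> Rect:
    return (min(r[0] for r in rects), min(r[1] for r in rects),
            max(r[2] for r in rects), max(r[3] for r in rects))


def pick_reinserts(entries: List[Rect], B: int, fraction: float = 0.3):
    """Split the B + 1 entries of an overflowing node into (kept, to_reinsert)."""
    if len(entries) != B + 1:
        raise ValueError("expected an overflowing node with B + 1 entries")
    cx, cy = center(mbr(entries))

    def dist(r: Rect) -> float:
        x, y = center(r)
        return math.hypot(x - cx, y - cy)

    # Decreasing distance from the center of the MBR enclosing all B + 1 entries.
    ordered = sorted(entries, key=dist, reverse=True)
    p = max(1, round(fraction * B))  # p = 30% of B, at least one entry
    return ordered[p:], ordered[:p]
```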

The third difference is in the node splitting algorithm. When a split is needed, the node entries are first sorted twice along each of the d dimensions. The two sorts are based on the low and on the high MBR endpoint values, respectively. Remember that nodes must have a minimum of b and a maximum of B entries. Thus, using one of the sorted lists, the B + 1 entries can be partitioned into two groups, S1 and S2, by splitting anywhere after the i-th entry, b ≤ i ≤ B − b + 1, of the sorted list, so that both groups receive at least b entries. S1 and S2 consist of the entries before and after the split position, respectively. In order to choose the best split, the following three objective functions were considered (for 2-d data) and tested in different combinations:

1. area-value = area(MBR(S1)) + area(MBR(S2))

2. perimeter-value = perimeter(MBR(S1)) + perimeter(MBR(S2))

3. overlap-value = area(MBR(S1) ∩ MBR(S2))

Notice that for a fixed area, the MBR with smallest perimeter is the square.

Based on experiments, the following split policy is adopted. For each dimension, the R* tree computes the perimeter-value of every possible grouping (S1, S2) over both sorted lists of that dimension, and chooses the dimension whose groupings yield the smallest sum of perimeter-values. Once the dimension has been chosen, the algorithm chooses the grouping along that dimension that minimizes the overlap-value.
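A compact 2-D sketch of this split policy follows, assuming rectangles as `(xlo, ylo, xhi, yhi)` tuples; all names are hypothetical. Breaking overlap-value ties by the smaller area-value is an assumption on our part, since the text above only specifies the overlap-value.

```python
from typing import Iterator, List, Tuple

Rect = Tuple[float, float, float, float]  # hypothetical (xlo, ylo, xhi, yhi)


def mbr(rects: List[Rect]) -> Rect:
    return (min(r[0] for r in rects), min(r[1] for r in rects),
            max(r[2] for r in rects), max(r[3] for r in rects))


def area(r: Rect) -> float:
    return (r[2] - r[0]) * (r[3] - r[1])


def perimeter(r: Rect) -> float:
    return 2 * ((r[2] - r[0]) + (r[3] - r[1]))


def overlap_value(a: Rect, b: Rect) -> float:
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    return w * h if w > 0 and h > 0 else 0.0


def groupings(entries: List[Rect], b: int) -> Iterator[Tuple[int, List[Rect], List[Rect]]]:
    """Yield (axis, S1, S2) for both sorted lists of each axis and every legal split."""
    n = len(entries)  # B + 1
    for axis in (0, 1):
        for key in (lambda r, a=axis: r[a], lambda r, a=axis: r[a + 2]):  # low, high
            ordered = sorted(entries, key=key)
            for i in range(b, n - b + 1):  # S1 = first i entries, b <= i <= B - b + 1
                yield axis, ordered[:i], ordered[i:]


def rstar_split(entries: List[Rect], b: int) -> Tuple[List[Rect], List[Rect]]:
    # 1. Pick the axis whose groupings have the smallest total perimeter-value.
    totals = {0: 0.0, 1: 0.0}
    for axis, s1, s2 in groupings(entries, b):
        totals[axis] += perimeter(mbr(s1)) + perimeter(mbr(s2))
    best_axis = min(totals, key=totals.get)
    # 2. Along that axis, minimize overlap-value (ties: smaller area-value).
    candidates = [(s1, s2) for axis, s1, s2 in groupings(entries, b) if axis == best_axis]
    return min(candidates, key=lambda g: (overlap_value(mbr(g[0]), mbr(g[1])),
                                          area(mbr(g[0])) + area(mbr(g[1]))))
```

Summing over all groupings when choosing the axis favors the dimension along which splits tend to produce compact, square-like groups, in line with the remark that the square minimizes perimeter for a fixed area.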

These three changes were shown to substantially improve the I/O performance for all data sets studied.

The Hilbert R-tree [29] further improves performance by imposing a linear order on the input rectangles that results in MBRs of small area and perimeter. The tree is actually an R-tree augmented with order information. Intersection queries are performed as before, using the standard R-tree algorithm; but as a consequence of the ordering constraints, insertion and deletion can proceed as in B+-trees and there is no longer a need to consider various leaf selection heuristics. Additionally, the linear order allows for effective use of deferred splitting, a technique that improves node utilization and performance, since the resulting trees require fewer nodes for a given input set.

To define an ordering of the input values, Kamel and Faloutsos [29] propose the use of a space-filling curve, such as the Hilbert curve. The power of these curves lies in their ability to linearly order multidimensional points such that nearby points in this order are also close in multidimensional space. Hilbert curves are not the only reasonable choice. Other curves, such as the Peano or Z-order curve, may also be used. See [52] for a discussion on space-filling curves.

A d-dimensional Hilbert curve of order k is a curve $H_d$ that visits every vertex of a finite d-dimensional grid of size $2^k \times \cdots \times 2^k = 2^{kd}$. Its construction can best be viewed as a sequence of stages. At each stage, an instance of the curve of the previous stage is rotated and placed in each of $2^d$ equal-sized sub-quadrants. Endpoints of the $2^d$ sub-curves are then connected to produce the curve at the next stage. The first three stages of the Hilbert curve for two and three dimensions are illustrated in Figures 21.2 and 21.3, respectively.

Each grid vertex is assigned a Hilbert value, which is an integer that corresponds to its position along the curve. For instance, in $H_2$, (0, 0) and (1, 2) have Hilbert values 0 and 7, respectively. This assignment is easily extended to rectangles, in which case the Hilbert value of the grid point closest to the rectangle center is assigned. Algorithms for computing the position of a point along a space-filling curve are given in [6, 10, 58].
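One common way to compute a 2-D Hilbert value is the iterative, quadrant-by-quadrant method sketched below; it is not taken from [6, 10, 58]. At each level it reads one bit of x and one bit of y to find the sub-quadrant, adds that sub-quadrant's offset, and then rotates the coordinates so that the next level is read in a canonical orientation. Orientation conventions differ between implementations; this variant happens to agree with the two example values above. The function names are hypothetical.

```python
def _rotate(n: int, x: int, y: int, rx: int, ry: int):
    """Flip/transpose (x, y) so the next sub-quadrant is read in canonical orientation."""
    if ry == 0:
        if rx == 1:
            x, y = n - 1 - x, n - 1 - y
        x, y = y, x
    return x, y


def hilbert_value(n: int, x: int, y: int) -> int:
    """Position of grid vertex (x, y) along the 2-D Hilbert curve on an n x n grid, n = 2**k."""
    d = 0
    s = n // 2
    while s > 0:
        rx = 1 if x & s else 0         # which half along x at this level
        ry = 1 if y & s else 0         # which half along y at this level
        d += s * s * ((3 * rx) ^ ry)   # sub-quadrant index (0..3) weighted by its size
        x, y = _rotate(n, x, y, rx, ry)
        s //= 2
    return d
```

On a 4 × 4 grid, `hilbert_value(4, 0, 0)` is 0 and `hilbert_value(4, 1, 2)` is 7, and consecutive values always land on neighboring grid vertices.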

The structure of the R-tree is modified as follows. Leaf nodes remain the same. Each entry of an internal node now has the form (r, p, v), where r and p have the same interpretation as before, and v is the largest Hilbert value of all data items stored in the subtree with root p. This assignment results in update algorithms that are similar to those used for B+-trees. In particular, it is straightforward to implement an effective policy for deferred splitting, which reduces the number of splits needed while performing insertions. The authors propose the following policy, which they call s-to-(s + 1) splitting. When a node overflows, an attempt is first made to shift entries laterally to s − 1 sibling nodes at the same level. An actual split occurs only if the additional entry cannot be accommodated by shifting, because the s − 1 siblings are already full. When this happens, a new node is created and the sB + 1 entries are distributed among the s + 1 nodes. Because entries at the leaves are sorted by Hilbert value, bounding boxes of leaves tend to have small area and perimeter, and node utilization is high. Notice that there is a clear trade-off between node utilization and insertion complexity (which increases as s increases). The case s = 1 corresponds to the regular split policy used in the original R-tree, i.e., split whenever a node overflows.

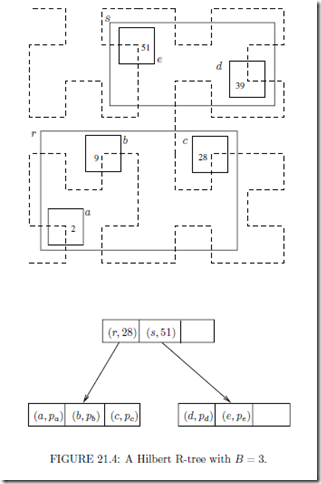

A sample tree with 5 data rectangles is shown in Figure 21.4. There are two leaf nodes and one internal node (the root). The pointer entries of the internal node are represented by arrows. Each input rectangle has been annotated with its Hilbert value. In reality, the corners of the rectangles would fall on grid vertices. They have been made smaller in order to make the figure more readable. Inserting a new rectangle whose center has Hilbert value 17 would cause an overflow in r. With deferred splitting, a split is not necessary. Instead, the new rectangle would be accommodated in r after shifting rectangle c (with Hilbert value 28) to its sibling s.

The authors report improvements of up to 28% in performance over R∗-trees and recommend using 2-to-3 splitting, which results in an average node utilization of 82.2%.

In practice, one does not store or compute all bit values on the hypothetical grid. Let β be the number of bits required to describe one coordinate. Storing the Hilbert value of a d-dimensional point requires dβ bits of storage, which may be larger than the size of native machine integers. It is possible to compare the Hilbert values of two points without storing the values explicitly. Conceptually, the process computes bit positions, one at a time, until discrimination is possible. Consider the 2-d case and notice that the first (most significant) bits of the x- and y-coordinates of a point determine which quadrant contains it. Successive bits determine which successively smaller sub-quadrants contain the point. When two center points (x1, y1) and (x2, y2) need to be compared, the bits of each coordinate are examined until it can be determined that one of the points lies in a different sub-quadrant than the other (one can use the sense and rotation tables described in [28] to accomplish this task). The information gathered is used to decide which point is closer to the origin along the Hilbert curve.
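A sketch of such a comparison for the 2-D case, assuming integer grid coordinates on an n × n grid with n a power of two. Instead of the sense and rotation tables of [28], it reuses the arithmetic quadrant transform of the common iterative Hilbert-index algorithm; the function names are hypothetical. Because the two points share every sub-quadrant up to the first difference, they undergo identical rotations, so comparing the first differing sub-quadrant index decides the order.

```python
from functools import cmp_to_key


def _rotate(n: int, x: int, y: int, rx: int, ry: int):
    """Quadrant transform of the iterative Hilbert-index algorithm."""
    if ry == 0:
        if rx == 1:
            x, y = n - 1 - x, n - 1 - y
        x, y = y, x
    return x, y


def hilbert_compare(p, q, n: int) -> int:
    """Return -1, 0 or 1 as p comes before, at, or after q along the Hilbert curve."""
    (x1, y1), (x2, y2) = p, q
    s = n // 2
    while s > 0:
        rx1, ry1 = (1 if x1 & s else 0), (1 if y1 & s else 0)
        rx2, ry2 = (1 if x2 & s else 0), (1 if y2 & s else 0)
        d1, d2 = (3 * rx1) ^ ry1, (3 * rx2) ^ ry2  # sub-quadrant index 0..3 along the curve
        if d1 != d2:
            return -1 if d1 < d2 else 1            # first differing sub-quadrant decides
        # Same sub-quadrant so far: both points undergo the same rotation.
        x1, y1 = _rotate(n, x1, y1, rx1, ry1)
        x2, y2 = _rotate(n, x2, y2, rx2, ry2)
        s //= 2
    return 0


def hilbert_sorted(points, n: int):
    """Sort grid points along the Hilbert curve without materializing their values."""
    return sorted(points, key=cmp_to_key(lambda a, b: hilbert_compare(a, b, n)))
```

Only as many levels are examined as needed to separate the two points, and no dβ-bit integer is ever built.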

There are applications where the data is static, or does not change very frequently. Even if the data is dynamic, it may happen that an index needs to be constructed for a large data set which is available a priori. In these circumstances, building an R-tree by inserting one object at a time has several disadvantages: (a) high load time, (b) sub-optimal space utilization, and, most importantly, (c) poor R-tree structure requiring the retrieval of a large number of nodes in order to satisfy a query. As discussed in the previous section, other dynamic algorithms [3, 57] improve the quality of the R-tree, but are still not competitive with regard to query time when compared to loading algorithms that are allowed to preprocess the data to be stored. When done properly, preprocessing results in R-trees with nearly 100% space utilization and improved query times (because fewer nodes need to be accessed while performing a query). Such packing algorithms were first proposed by Roussopoulos [51] and later by Kamel and Faloutsos [28] and by Leutenegger et al. [36]. An approach that is intermediate between inserting a tuple at a time and constructing the entire tree by bulk loading is followed by [12], where an entire batch of input values is processed by partitioning the input into clusters and then inserting R-trees for the clusters into the existing R-tree.

The general approach to bulk loading an R-tree is similar to building a B-tree from a collection of keys by creating the leaf level first and then creating each successively higher level until the root node is created. The general approach is outlined below.

General Algorithm:

1. Sort the n rectangles and partition them into $\lceil n/B \rceil$ consecutive groups of B rectangles. Each group of B rectangles is eventually placed in the same leaf level node. Note that the last group may contain fewer than B rectangles.

2. Load the $\lceil n/B \rceil$ groups of rectangles into nodes and output the (MBR, address) for each leaf level node into a temporary file. The addresses are used as the child pointer fields for the nodes of the next higher level.

3. Recursively pack these MBRs into nodes at the next level, proceeding upwards, until the root node is created.
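The general algorithm can be sketched in memory as below, with a Python list standing in for the temporary file of (MBR, address) pairs. The sort key is a parameter, so the packing methods differ only in the function passed as `key`; `nx_key` illustrates the Nearest-X ordering. Rectangle tuples, node tuples, and all function names are hypothetical, and the input is assumed to be non-empty.

```python
from typing import Callable, List, Tuple

Rect = Tuple[float, float, float, float]  # hypothetical (xlo, ylo, xhi, yhi)
Node = Tuple[Rect, list]                  # (MBR, children): rectangles or child nodes


def mbr(rects: List[Rect]) -> Rect:
    return (min(r[0] for r in rects), min(r[1] for r in rects),
            max(r[2] for r in rects), max(r[3] for r in rects))


def pack_level(items: list, B: int, key: Callable[[Rect], float],
               rect_of: Callable[[object], Rect]) -> List[Node]:
    """Sort items by key(rect), cut them into ceil(len/B) runs of B, one node per run."""
    ordered = sorted(items, key=lambda it: key(rect_of(it)))
    nodes = []
    for i in range(0, len(ordered), B):
        group = ordered[i:i + B]  # the last group may hold fewer than B items
        nodes.append((mbr([rect_of(g) for g in group]), group))
    return nodes


def bulk_load(rects: List[Rect], B: int, key: Callable[[Rect], float]) -> Tuple[Node, int]:
    """Build the tree bottom-up; returns (root, height)."""
    level = pack_level(rects, B, key, lambda r: r)  # leaf level
    height = 1
    while len(level) > 1:
        # The (MBR, node) pairs of this level become the entries of the next one.
        level = pack_level(level, B, key, lambda node: node[0])
        height += 1
    return level[0], height


def nx_key(r: Rect) -> float:
    """Nearest-X ordering: x-coordinate of the rectangle's center."""
    return (r[0] + r[2]) / 2
```

For ten unit squares laid out along the x-axis and B = 3, the leaf level has ⌈10/3⌉ = 4 nodes, the next level has ⌈4/3⌉ = 2, and the root sits on top, giving a height of 3.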

The three algorithms differ only in how the rectangles are sorted at each level. These differences are described below.

Nearest-X (NX):

This algorithm was proposed in [51]. The rectangles are sorted by the x-coordinate of a designated point such as the center. Once sorted, the rectangles are packed into nodes, in groups of size B, using this ordering. While our description is in terms of x, a different coordinate can clearly be used.

Hilbert Sort (HS):

The algorithm of [28] orders the rectangles using the Hilbert space-filling curve. The center points of the rectangles are sorted based on their distance from the origin, measured along the curve. This process determines the order in which the rectangles are placed into the nodes of the R-tree.
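A sketch of the leaf-level Hilbert ordering follows. It assumes that rectangle centers are snapped to the nearest vertex of a 2^k × 2^k grid laid over a bounding box `bounds`; the grid mapping and all names are our assumptions. The Hilbert value uses the common iterative quadrant method, not necessarily the one used in [28].

```python
from typing import List, Tuple

Rect = Tuple[float, float, float, float]  # hypothetical (xlo, ylo, xhi, yhi)


def hilbert_value(n: int, x: int, y: int) -> int:
    """Position of (x, y) along the 2-D Hilbert curve on an n x n grid (n a power of 2)."""
    d, s = 0, n // 2
    while s > 0:
        rx, ry = (1 if x & s else 0), (1 if y & s else 0)
        d += s * s * ((3 * rx) ^ ry)
        if ry == 0:  # rotate/flip the sub-quadrant into canonical orientation
            if rx == 1:
                x, y = n - 1 - x, n - 1 - y
            x, y = y, x
        s //= 2
    return d


def hilbert_leaves(rects: List[Rect], B: int, k: int, bounds: Rect) -> List[List[Rect]]:
    """Order rects by the Hilbert value of their centers and cut into runs of B."""
    n = 1 << k
    xlo, ylo, xhi, yhi = bounds

    def snap(v: float, lo: float, hi: float) -> int:
        # Nearest grid coordinate in [0, n - 1] for v in [lo, hi].
        t = (v - lo) / (hi - lo) if hi > lo else 0.0
        return min(n - 1, max(0, round(t * (n - 1))))

    def key(r: Rect) -> int:
        cx, cy = (r[0] + r[2]) / 2, (r[1] + r[3]) / 2
        return hilbert_value(n, snap(cx, xlo, xhi), snap(cy, ylo, yhi))

    ordered = sorted(rects, key=key)
    return [ordered[i:i + B] for i in range(0, len(ordered), B)]
```

Higher levels can be packed the same way from the leaves' MBRs.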

Sort-Tile-Recursive (STR):

STR [36] is best described recursively with d = 2 providing the base case. (The case d = 1 is already handled well by regular B-trees.) Accordingly, we first consider a set of rectangles in the plane. The basic idea is to “tile” the data space using $\lceil\sqrt{n/B}\,\rceil$ vertical slices so that each slice contains enough rectangles to pack roughly $\sqrt{n/B}$ nodes. Once again we assume coordinates are for the center points of the rectangles. Determine the number of leaf level pages $P = \lceil n/B \rceil$ and let $S = \lceil \sqrt{P}\, \rceil$. Sort the rectangles by x-coordinate and partition them into S vertical slices. A slice consists of a run of S · B consecutive rectangles from the sorted list. Note that the last slice may contain fewer than S · B rectangles. Now sort the rectangles of each slice by y-coordinate and pack them into nodes by grouping them into runs of length B (the first B rectangles into the first node, the next B into the second node, and so on).

The case d > 2 is a simple generalization of the approach described above. First, sort the hyper-rectangles according to the first coordinate of their center. Then divide the input set into $S = \lceil P^{1/d} \rceil$ slabs, where a slab consists of a run of $B \cdot \lceil P^{(d-1)/d} \rceil$ consecutive rectangles from the sorted list. Each slab is now processed recursively using the remaining d − 1 coordinates (i.e., treated as a (d − 1)-dimensional data set).
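STR can be sketched recursively on center points. This Python sketch assumes non-empty input given as center-point tuples (one coordinate per dimension) and only forms the leaf groups; upper levels would be packed the same way from the leaves' MBRs. Function and parameter names are hypothetical. For d = 2 the slab size B · ⌈P^{1/2}⌉ equals S · B, matching the planar description, and the last coordinate is handled by cutting sorted runs of B.

```python
import math
from typing import List, Tuple

Point = Tuple[float, ...]  # center point of a (hyper-)rectangle, d coordinates


def str_leaves(points: List[Point], B: int, axis: int = 0) -> List[List[Point]]:
    """Sort-Tile-Recursive: group center points into leaves of at most B points."""
    n = len(points)
    d = len(points[0]) - axis  # coordinates still to be processed
    ordered = sorted(points, key=lambda p: p[axis])
    if d == 1:
        # Last coordinate: pack consecutive runs of B.
        return [ordered[i:i + B] for i in range(0, n, B)]
    P = math.ceil(n / B)                      # leaf pages for this subset
    slab = B * math.ceil(P ** ((d - 1) / d))  # B * ceil(P^((d-1)/d)) points per slab
    leaves: List[List[Point]] = []
    for i in range(0, n, slab):
        # Each slab is handled recursively on the remaining d - 1 coordinates.
        leaves.extend(str_leaves(ordered[i:i + slab], B, axis + 1))
    return leaves
```

For a 10 × 10 grid of centers and B = 10: P = 10, S = ⌈√10⌉ = 4, so the slices hold 40, 40 and 20 points and yield 4 + 4 + 2 = 10 full leaves.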

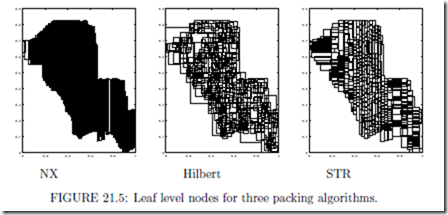

Figure 21.5 illustrates the results from packing a set of segments from a TIGER file corresponding to the city of Long Beach. The figure shows the resulting leaf level MBRs for the same data set for each of the three algorithms, using B = 100 to bulk load the trees.

As reported in [36], both Hilbert and STR significantly outperform NX packing on all types of data except point data, where STR and NX perform similarly. For tests conducted with both synthetic and actual data sets, STR outperformed Hilbert on all but one set, by up to 40%. In one instance (VLSI data), Hilbert packing performed up to 10% faster. As expected, these differences decrease rapidly as the query size increases.
