Computational Biology:Introduction,Discovering Unusual Words and Comparing Whole Genomes.

Introduction

In the last fifteen years, biological sequence data have been accumulating at exponential rate under continuous improvement of sequencing technology, progress in computer science, and steady increase of funding. Molecular sequence databases (e.g., EMBL, Genbank, DDJB, Entrez, Swissprot, etc.) currently collect hundreds of thousand of sequences of nucleotides and amino acids from biological laboratories all over the world, reaching into the hundreds of gigabytes. Such an exponential growth makes it increasingly important to have fast and automatic methods to process, analyze, and visualize massive amounts of data.

The exploration of many computational problems arising in contemporary molecular biol- ogy has now grown to become a new field of Computer Science. A coarse selection would include sequence homology and alignment, physical and genetic mapping, protein folding and structure prediction, gene expression analysis, evolutionary trees construction, gene find- ing, assembly for shotgun sequencing, gene rearrangements and pattern discovery, among others.

In this chapter we focus the attention on the applications of suffix trees to computational biology. In fact, suffix trees and their variants are among the most used (and useful) data structures in computational biology.

Among their applications, we mention pattern discovery [4, 31, 36, 37], alignment of whole genomes [17, 18, 24], detection of exact tandem repeats [46], detection of approximate re- peats [27], fragment assembly [15], oligo and probe design [41, 42], etc.

Here we describe two applications. In the first, the use of suffix trees and DAWGs is essential in developing a linear-time algorithm for solving a pattern discovery problem. In the second, suffix trees are used to solve a challenging algorithmic problem: the alignment

of two or more complete genomes.

DAWGs.)

(See Chapters 29 and 30 for more on suffix trees and

Discovering Unusual Words

In the context of computational biology, “pattern discovery” refers to the automatic identification of biologically significant patterns (or motifs ) by statistical methods. These methods

originated from the work of R. Staden [45] and have been recently expanded in a subfield of data-mining. The underlying assumption is that biologically significant words show distinctive distributional patterns within the genomes of various organisms, and therefore they can be distinguished from the others. The reality is not too far from this hypothesis (see, for example, [11, 12, 19, 25, 35, 39, 40, 51, 52]), because during the evolutionary process, living organisms have accumulated certain biases toward or against some specific motifs in their genomes. For instance, highly recurring oligonucleotides are often found in correspondence to regulatory regions or protein binding sites of genes (see, e.g., [10, 21, 49, 50]). Vice versa, rare oligonucleotide motifs may be discriminated against due to structural constraints of genomes or specific reservations for global transcription controls (see, e.g., [20, 51]).

From a statistical viewpoint, over- and under-represented words have been studied quite rigorously (see, e.g., [44] for a review).

A substantial corpus of works has been also produced by the scientific community which studies combinatorics on words (see, e.g., [29, 30] and references therein). In the application domain, however, the “success story” of pattern discovery has been its ability to find previously unknown regulatory elements in DNA sequences (see, e.g., [48, 49]). Regulatory elements control the expression (i.e., the amount of mRNA produced) of the genes over time, as the result of different external stimuli to the cell and other metabolic processes that take place internally in the cell. These regulatory elements are typically, but not always, found in the upstream sequence of a gene. The upstream sequence is defined as a portion of DNA of 1-2Kbases of length, located upstream of the site that controls the start of transcription. The regulatory elements correspond to binding sites for the factors involved in the transcriptional process. The complete characterization of these elements is a critical step in understanding the function of different

genes, and the complex network of interaction between them.

The use of pattern discovery to find regulatory elements relies on a conceptual hypothesis that genes which exhibit similar expression patterns are assumed to be involved in the same biological process or functions. Although many believe that this assumption is an oversimplification, it is still a good working hypothesis. Co-expressed genes are therefore expected to share common regulatory domains in the upstream regions for the coordinated control of gene expression. In order to elucidate genes which are co-expressed as a result of a specific stress or condition, a DNA microarray experiment is typically designed. After collecting data from the microarray, co-expressed genes are usually obtained by clustering the time series corresponding to their expression profiles.

As said, words that occur unexpectedly often or rarely in genetic sequences have been variously linked to biological meanings and functions. With increasing availability of whole genomes, exhaustive statistical tables and global detectors of unusual words on a scale of millions, even billions of bases become conceivable. It is natural to ask how large such tables may grow with increasing length of the input sequence, and how fast they can be computed. These problems need to be regarded not only from the conventional perspective of asymptotic space and time complexities, but also in terms of the volumes of data produced and ultimately, of practical accessibility and usefulness. Tables that are too large at the outset saturate the perceptual bandwidth of the user, and might suggest approaches that sacrifice some modeling accuracy in exchange for an increased throughput.

The number of distinct substrings in a string is at worst quadratic in the length of that string. The situation does not improve if one restricts himself to computing and displaying the most unusual words in a given sequence. This presupposes comparing the frequency of occurrence of every word in that sequence with its expectation: a word that departs from expectation beyond some pre-set threshold will be labeled as unusual or surprising.

Departure from expectation is assessed by a distance measure often called a score function. The typical format for a z-score is that of a difference between observed and expected counts, usually normalized to some suitable moment. For most a priori models of a source, it is not difficult to come up with extremal examples of observed sequences in which the number of, say, over-represented substrings grows itself with the square of the sequence length.

An extensive study of probabilistic models and scores for which the population of po- tentially unusual words in a sequence can be described by tables of size at worst linear in the length of that sequence was carried out in [4]. That study not only leads to more palatable representations for those tables, but also supports (non-trivial) linear time and space algorithms for their constructions, as described in what follows. These results do not mean that the number of unusual words must be linear in the input, but just that their representation and detection can be made such. Specifically, it is seen that it suffices to consider as candidate surprising words only the members of an a priori well identified set of “representative” words, where the cardinality of that set is linear in the text length. By the representatives being identifiable a priori we mean that they can be known before any score is computed. By neglecting the words other than the representatives we are not ruling out that those words might be surprising. Rather, we maintain that any such word: (i) is embedded in one of the representatives, and (ii) does not have a bigger score or degree of surprise than its representative (hence, it would add no information to compute and give its score explicitly).

Statistical analysis of words

For simplicity of exposition, assume that the source can be modeled by a Bernoulli distribution, i.e., symbols are generated i.i.d., and that strings are ranked based on their number of occurrences (possibly overlapping). The results reported in the rest of this section can be extended to other models and counts (see [4]).

is the auto-correlation factor of y, that depends on the set P (y) of the lengths of the periods∗ of y.

Given fx(y), E(Zy ) and Var(Zy ), a statistical significance score that measures the degree of “unusual-ness” of a substring y must be carefully chosen. Ideally, the score function should be independent of the structure and size of the word. That would allow one to make meaningful comparisons among substrings of various compositions and lengths based on the value of the score.

There is some general consensus that z-scores may be preferred over other types of score function [28]. For any word w, a standardized frequency called z-score, can be defined by

If E(Zy ) and Var(Zy ) are known, then under rather general conditions, the statistics z(y) is asymptotically normally distributed with zero mean and unit variance as n tends to infinity. In practice E(Zy ) and Var(Zy ) are seldom known, but are estimated from the sequence under study.

Detecting unusual words

Consider now the problem of computing exhaustive tables reporting scores for all substrings of a sequence, or perhaps at least for the most surprising among them. While the complexity of the problem ultimately depends on the probabilistic model and type of count, a table for all words of any size would require at least quadratic space in the size of the input, not to mention that such a table would take at least quadratic time to be filled.

As seen towards the end of the section, such a limitation can be overcome by partitioning the set of all words into equivalence classes with the property, that it suffices to account for only one or two candidate surprising words in each class, while the number of classes is linear in the textstring size. More formally, given a score function z, a set of words C, and a real positive threshold T , we say that a word w ∈ C is T-over-represented in C (resp., T-under-represented ) if z(w) > T (resp., z(w) < −T ) and for all words y ∈ C we have z(w) ≥ z(y) (resp., z(w) ≤ z(y)). We say that a word w is T-surprising if z(w) > T or z(w) < −T . We also call max(C) and min(C) respectively the longest and the shortest word in C, when max(C) and min(C) are unique.

Let now x be a textstring and {C1, C2,... , Cl } a partition of all its substrings, where max(Ci ) and min(Ci) are uniquely determined for all 1 ≤ i ≤ l. For a given score z and a real positive constant T , we call O T the set of T -over-represented words of Ci, 1 ≤ i ≤ l, with respect to that score function. Similarly, we call U T the set of T -under-represented words of Ci, and S T the set of all T -surprising words, 1 ≤ i ≤ l.

For strings u and v = suz, a (u, v)-path is a sequence of words {w0 = u, w1, w2,..., wj = v}, l ≥ 0, such that wi is a unit-symbol extension of wi−1 (1 ≤ i ≤ j). In general a (u, v)- path is not unique. If all w ∈ C belong to some (min(Ci), max(Ci ))-path, we say that class C is closed.

A score function z is (u, v)-increasing (resp., non-decreasing) if given any two words w1, w2 belonging to a (u, v)-path, the condition |w1| < |w2| implies z(w1) < z(w2) (resp., z(w1) ≤ z(w2)). The definitions of a (u, v)-decreasing and (u, v)-non-increasing z-scores are symmetric. We also say that a score z is (u, v)-monotone when specifics are unneeded or understood. The following fact and its symmetric are immediate.

FACT 58.1 If the z-score under the chosen model is (min(Ci), max(Ci))-increasing, and Ci is closed, 1 ≤ i ≤ l, then

In [4], extensive results on the monotonicity of several scores for different probabilistic models and counts are reported. For the purpose of this chapter, we just need the following result.

THEOREM 58.1 (Apostolico et al. [4]) Let x be a text generated by a Bernoulli process, and pmax be the probability of the most frequent symbol.

Here, we pursue substring partitions {C1, C2,..., Cl } in forms which would enable us to restrict the computation of the scores to a constant number of candidates in each class Ci. Specifically, we require, (1) for all 1 ≤ i ≤ l, max(Ci) and min(Ci ) to be unique; (2) Ci to be closed, i.e., all w in Ci belong to some (min(Ci), max(Ci ))-path; (3) all w in Ci have the same count. Of course, the partition of all substrings of x into singleton classes fulfills those properties. In practice, l should be as small as possible.

We begin by recalling a few basic facts and constructs from, e.g., [9]. We say that two strings y and w are left-equivalent on x if the set of starting positions of y in x matches the set of starting positions of w in x. We denote this equivalence relation by ≡l. It follows from the definition that if y ≡l w, then either y is a prefix of w, or vice versa. Therefore, each class has unique shortest and longest word. Also by definition, if y ≡l w then fx(y) = fx(w).

Example 58.1

For instance, in the string ataatataataatataatatag the set {ataa, ataat, ataata} is a left-equivalent class (with position set {1, 6, 9, 14}) and so are {taa, taat, taata} and {aa, aat, aata}. We have 39 left-equivalent classes, much less than the total number of substrings, which is 22 × 23/2 = 253, and than the number of distinct substrings, in this case 61.

We similarly say that y and w are right-equivalent on x if the set of ending positions of y in x matches the set of ending positions of w in x. We denote this by ≡r . Finally, the equivalence relation ≡x is defined in terms of the implication of a substring of x [9, 16]. Given a substring w of x, the implication impx(w) of w in x is the longest string uwv such that every occurrence of w in x is preceded by u and followed by v. We write y ≡x w iff impx(y) = impx(w). It is not difficult to see that

LEMMA 58.1 The equivalence relation ≡x is the transitive closure of ≡l ∪ ≡r .

More importantly, the size l of the partition is linear in |x| = n for all three equivalence relations considered. In particular, the smallest size is attained by ≡x, for which the number of equivalence classes is at most n + 1.

Each one of the equivalence classes discussed can be mapped to the nodes of a corresponding automaton or word graph, which becomes thereby the natural support for the statistical tables. The table takes linear space, since the number of classes is linear in |x|.

The automata themselves are built by classical algorithms, for which we refer to, e.g., [5, 9] with their quoted literature, or easy adaptations thereof. The graph for ≡l, for instance, is the compact subword tree Tx of x, whereas the graph for ≡r is the DAWG, or Directed Acyclic Word Graph Dx, for x. The graph for ≡x is the compact version of the DAWG.

These data structures are known to commute in simple ways, so that, say, an ≡x-class can be found on Tx as the union of some left-equivalent classes or, alternatively, as the union of some right-equivalent classes. Beginning with left-equivalent classes, that correspond one-to-one to the nodes of Tx, we can build some right-equivalent classes as follows. We use the elementary fact that whenever there is a branching node µ in Tx, corresponding to w = ay, a ∈ Σ, then there is also a node ν corresponding to y, and there is a special suffix link directed from ν to µ. Such auxiliary links induce another tree on the nodes of Tx, that we may call Sx. It is now easy to find a right-equivalent class with the help of suffix links.

For this, traverse Sx bottom-up while grouping in a single class all strings such that their terminal nodes in Tx are roots of isomorphic subtrees of Tx. When a subtree that violates the isomorphism condition is encountered, we are at the end of one class and we start with a new one.

Example 58.2

For example, the three subtrees rooted at the solid nodes in Figure 58.1 correspond to the end-sets of ataata, taata and aata, which are the same, namely, {6, 11, 14, 19}. These three words define the right-equivalent class {ataata, taata, aata}. In fact, this class cannot be made larger because the two subtrees rooted at the end nodes of ata and tataata are not isomorphic to the subtree of the class. We leave it as an exercise for the reader to find all the right-equivalence classes on Tx. It turns out that there are 24 such classes in this example.

Subtree isomorphism can be checked by a classical linear-time algorithm by Aho et al. [3]. But on the suffix tree Tx this is done even more quickly once the f counts are available (see, e.g., [8, 23]).

LEMMA 58.2 Let T1 and T2 be two subtrees of Tx. T1 and T2 are isomorphic if and only if they have the same number of leaves and their roots are connected by a chain of suffix links.

If, during the bottom-up traversal of Sx, we collect in the same class strings such that

their terminal arc leads to nodes with the same frequency counts f , then this would identify and produce the ≡x-classes, i.e., the smallest substring partition.

Example 58.3

For instance, starting from the right-equivalent class C = {ataata, taata, aata}, one can augment it with of all words which are left-equivalent to the elements of C. The result is one ≡x-class composed by {ataa, ataat, ataata, taa, taat, taata, aa, aat, aata}.

Their respective pos sets are {1,6, 9,14}, {1,6, 9,14}, {1,6, 9,14}, {2,7, 10,15}, {2,7, 10,15}, {2,7, 10,15}, {3,8, 11,16}, {3,8, 11,16}, {3,8, 11,16}. Their respective endpos sets are {4,9, 12,17}, {5,10, 13,18}, {6,11, 14,19}, {4,9, 12,17}, {5,10, 13,18}, {6,11, 14,19}, {4,9, 12,17}, {5,10, 13,18}, {6,11, 14,19}. Because of Lemma 58.1, given two words y and w in the class, either they share the start set, or they share the end set, or they share the start set by transitivity with a third word in the class, or they share the end set by transitivity with a third word in the class. It turns out that there are only seven ≡x-classes in this example.

Note that the longest string in this ≡x-class is unique (ataata) and that it contains all the others as substrings. The shortest string is unique as well (aa). As said, the number of occurrences for all the words in the same class is the same (4 in the example). Figure 58.2 illustrates the seven equivalence classes for the running example. The words in each class have been organized in a lattice, where edges correspond to extensions (or contractions) of a single symbol. In particular, horizontal edges correspond to right extensions and vertical edges to left extensions.

While the longest word in an ≡x-class is unique, there may be in general more than one shortest word. Consider, for example, the text x = ak gk, with k > 0. Choosing k = 2 yields a class which has three words of length two as minimal elements, namely, aa, gg, and ag. (In fact, impx(aa) = impx(gg) = impx(ag) = aagg.) Taking instead k = 1, all three

substrings of x = ag coalesce into a single class which has two shortest words.

Recall that by Lemma 58.1 each ≡x-class C can be expressed as the union of one or more left-equivalent classes. Alternatively, C can be also expressed as the union of one or more right-equivalent classes. The example above shows that there are cases in which left- or right-equivalent classes cannot be merge without violating the uniqueness of the shortest word. Thus, we may use the ≡x-classes as the Ci’s in our partition only if we are interested in detecting over-represented words. If under-represented words are also wanted, then we must represent a same ≡x-class once for each distinct shortest word in it.

It is not difficult to accommodate this in the subtree merge procedure. Let p(u) denote the parent of u in Tx. While traversing Sx bottom-up, merge two nodes u and v with the same f count if and only if u and v are connected by a suffix link and so are p(u) and p(v).

This results in a substring partition slightly coarser than ≡x. It will be denoted by ≡˜x. In conclusion, we can state the following fact.

FACT 58.2 Let {C1, C2,..., Cl} be the set of equivalence classes built on the equivalence relation ≡˜x on the substrings of text x. Then, for all 1 ≤ i ≤ l,

1. max(Ci) and min(Ci) are unique

2. all w ∈ Ci are on some (min(Ci), max(Ci))-path

3. all w ∈ Ci have the same number of occurrences fx(w)

We are now ready to address the computational complexity of our constructions. In [5], linear-time algorithms are given to compute and store expected value E(Zw ) and variance Var(Zw ) for the number of occurrences under Bernoulli model of all prefixes of a given string. The crux of that construction rests on deriving an expression of the variance (see Expression 58.1) that can be cast within the classical linear time computation of the “failure function” or smallest periods for all prefixes of a string (see, e.g., [3]). These computations are easily adapted to be carried out on the linked structure of graphs such as Sx or Dx, thereby yielding expectation and variance values at all nodes of Tx, Dx, or the compact variant of the latter. These constructions take time and space linear in the size of the graphs, hence linear in the length of x. Combined with the monotonicity results this yields immediately:

The algorithm is implemented in a software tool called Verbumculus that can be found at http://www.cs.ucr.edu/∼stelo/Verbumculus/. A description of the tool and a demonstration of its applicability to the analysis of biological datasets will appear in [7].

Comparing Whole Genomes

As of today (mid 2003), Genbank contains “complete” genomes for more that 1,000 viruses, over 100 microbes, and about 100 eukariota. The abundance of complete genome sequences has given an enormous boost to comparative genomics. Association studies are emerging as a powerful tool for the functional identification of genes and molecular genetics has begun to reveal the biological basis of diversity. Comparing the genomes of related species gives us new insights into the complex structure of organisms at the DNA-level and protein-level.

The first step when comparing genomes is to produce an alignment, i.e., a collinear ar- rangement of sequence similarities. Alignment of nucleic or amino acid sequences has been one of the most important methods in sequence analysis, with much dedicated research and now many sophisticated algorithms available for aligning sequences with similar regions. These require assigning a score to all the possible alignments (typically, the sum of the similarity/identity values for each aligned symbol, minus a penalty for the introduction of gaps), along with a dynamic programming method to find optimal or near-optimal alignments according to this scoring scheme (see, e.g., [34]).

These dynamic programming methods run in time proportional to the product of the length of the sequences to be aligned. Hence they are not suitable for aligning entire genomes. Recently several genome alignment programs have been developed, all using an anchor-based method to compute an alignment (for an overview see [13]). An anchor is an exact match of some minimum length occurring in all genomes to be aligned (see Figure 58.3). The anchor-based method can roughly be divided

into the following three phases:

(1) Computation of all potential anchors.

(2) Computation of an optimal collinear sequence of non-overlapping potential an-chors: these are the anchors that form the basis of the alignment (see Figure58.4).

(3) Closure of the gaps in between the anchors.

In the following, we will focus on phase (1) and explain two algorithms to compute potential anchors. The first algorithm allows one to align more than two genomes, while

the second is limited to two genomes, but uses less space than the former. Both algorithms are based on suffix trees. For phase (2) of the anchor-based method, one uses methods from computational geometry. The interest reader is referred to the algorithms described in [1, 2, 33, 54]. In phase (3), one can apply any standard alignment methods, or even the same anchor-based method with relaxed parameters.

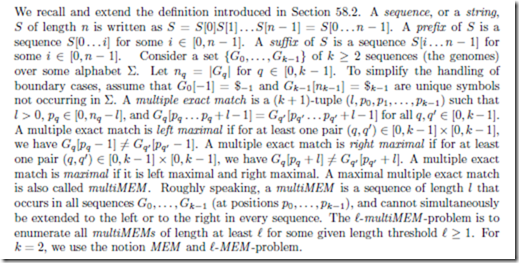

Basic Definitions

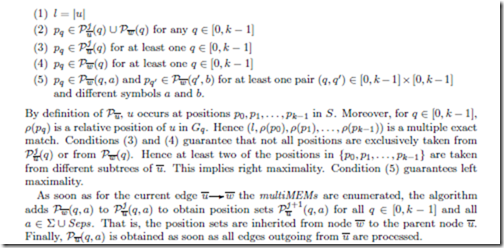

Each combination of position sets requires to enumerate the following cartesian product:

The enumeration is done in three steps, as follows. In a first step one enumerates all possible k-tuples (P0, P1,... , Pk−1) of non-empty sets where each Pq is either Pu(q) or Pw (q). Such a k-tuple is called father/child choice, since it specifies to either choose a position from the father u (a father choice) or from the child w (a child choice). One rejects the two k-tuples specifying only father choices or only child choices and process the remaining father/child choices further. In the second step, for a fixed father/child choice (P0, P1,... , Pk−1) one enumerates all possible k-tuples (a0,..., ak−1) (called symbol choices) such that Pq (aq ) /= ∅.

At most |Σ| symbol choices consisting of k identical symbols (this can be decided in constant time) are rejected. The remaining symbol choices are processed further. In the third step, for a fixed symbol choice (a0,..., ak−1) we enumerate all possible k-tuples (p0,..., pk−1) such that pq ∈ Pq (aq ) for q ∈ [0,k − 1]. By construction, each of these k-tuples represents a multiMEM of length l. The cartesian product (58.2) thus can be enumerated in O(k) space and in time proportional to its size.

The suffix tree construction and the bottom-up traversal (without handling of position sets) requires O(n) time. Thus the algorithm described here runs in O(|Σ|kn) space and O(|Σ|kn + z) time where z is the number of multiMEMs. It is still unclear if there is an algorithm which avoids the factors |Σ| or k in the space or time requirement.

Space efficient computation of MEMs for two genomes

The algorithm computes all MEMs of length at least f by constructing the suffix tree of G0 and matches G1 against it. The matching process delivers substrings of G1 represented by locations in the suffix tree, where a location is defined as follows: Suppose a string u occurs in ST(G0). If there is a branching node u, then u is the location of u. If v is the branching node of maximal depth, such that u = vw for some non-empty string w, then (v, w) is the location of u. The location of u is denoted by loc(u).

ST(G0) represents all suffixes Ti = G0[i ... n0 − 1]$0 of G0$0. The algorithm processes G1 suffix by suffix from longest to shortest. In the jth step, the algorithm processes suffix Rj = G1[j ... n1 − 1]$2 and computes the locations of two prefixes pj and pj of Rj defined as follows:

the depth first traversal, the lcp-stack allows to determine in constant time the length of the longest common prefix of Ti and Rj . As a consequence, the depth first traversal requires time proportional to the size of the subtree. Thus the total time for all depth first traversals of the subtrees below loc(pj ), j ∈ [0, n1 − 1], is O(r).

Altogether, the algorithm described here runs in O(n0 + n1 + r) time and O(n0) space. It is implemented as part of the new version of the MUMmer genome alignment program, see http://www.tigr.org/Software/mummer.

Comments

Post a Comment