Searching and Priority Queues in o(log n) Time:Overview

The basic purpose of this chapter is to introduce some of the basic techniques and give references to recent development:

• We start by presenting some simple data structures, which allow us to explain how the “information-theoretic O(log n) barrier” bay be surpassed. These data structures use a two-step approach: First, range reduction is used to decrease key length, such that we only need to consider keys that are much shorter than w. Secondly, we treat these short keys efficiently by packed computing where many keys are packed together in words.

• Next, we discuss some more elaborate data structures. In particular, we show how to achieve low worst-case complexity in linear space.

– The fusion tree, the first data structure presented that achieved sublogarithmic complexity.

– The exponential search tree, which achieves tight worst-case bound on dynamic ordered dictionaries.

• We also give references to recent results on efficient priority queue implementations and sorting.

Achieving Sub-Logarithmic Time per Element by Simple Means In this section, we show that it is surprisingly simple to achieve a sublogarithmic complexity in n independent of w, which implies sorting and searching asymptotically faster than comparison-based algorithms.

We use indirect addressing and large arrays. As a consequence, the data structures will need much space. However, all algorithms presented here can be fit into linear space with randomization (i.e. with universal hashing [11]).

In some cases, we will consider keys that are shorter than w, we will then use b or k to denote key length.

In this section, we will use F (n, b) to express the complexity of searching, as specified below.

DEFINITION 39.2 Let F (n, b) be the worst-case cost of performing one search or update in an ordered dictionary storing n keys of length b.

Unless we use hashing to obtain linear space, the methods discussed in this section can all be implemented with a simple instruction set. All necessary instructions are standard, they are even in AC0. (An instruction is in AC0 if it is implementable by a constant depth, unbounded fan-in (AND,OR,NOT)-circuit of size wO(1) . An example of a non-AC0 instruction is multiplication [9].)

Range Reduction

One way to simplify a computational problem is by range reduction. In this case, we reduce the problem of dealing with w-bit keys to that of dealing with k-bits keys, k < w.

Assume that we view our w-bit keys as consisting of two w/2-bit characters and store these in a trie of height 2. Each internal node in the trie contains

• a reference to the min-element below the node; the min-element is not stored in any subtrie;

• a table of subtries, where each existing subtrie is represented by a w/2-bit key;

• a data structure for efficient neighbour search among the w/2-bit keys representing the subtries.

Since each node except the root has one incoming edge and each node contains exactly one element (the min-element), the trie has exactly n nodes and n − 1 edges.

We make neighbour searches in the following way: Traverse down the trie. If we find a leaf, the search ends, otherwise we end up at an empty entry in the subtrie table of some node. By making a neighbour search in that node, we are done. The cost for traversing the trie is O(1) and the cost for a local neighbour search is O(F (n, b/2)) by definition.

The space requirements depend on how the table of subtrie pointers is implemented. If the table is implemented as an array of length 2b/2, each node in the trie requires Θ(2b/2) space. If we instead represent each table as a hash table, the total space of all hash tables

is proportional to the total number of edges in the trie, which is n − 1.

We summarize this in the following equation.

F (n, w) = O(1) + F (n, w/2). (39.1)

We can use the same construction recursively. That is, the local data structure for neighbour search among w/2-bit keys can be a trie of height 2 where w/4 bits are used for branching, etc.

In order to apply recursion properly, we have to be a bit careful with the space consumption. First, note that if the number of edges in a trie with n elements was larger than n, for instance 2n, the total space (number of edges) would grow exponentially with the number of recursive levels. Therefore, we need to ensure that the number of edges in a trie is not just O(n) but actually at most n. This is the reason why each node contains a min-element;

in this way we guarantee that the number of edges is n − 1.

Secondly, even when we can guarantee that the space per recursive level does not increase, we are still faced with Θ(n) space (with hashing) per level. If we use more than a constant number of levels, this will require superlinear space. This is handled in the following way: When we apply the recursive construction r times, we only keep a small part of the elements in the recursive structure. Instead, the elements are kept in sorted lists of size Θ(r), and we keep only the smallest element from each list in our recursive trie. When searching for a key, we first search for its list in the recursive trie structure, we then scan the list. Insertions and deletions are made in the lists, and the sizes of the lists are maintained by merging and splitting lists. Now, the total space taken by each level of the recursive trie construction is Θ(n/r) and the total space for r recursive levels is Θ(n). Searching, splitting and merging within the lists only takes O(r) time. In summary, setting r = log(w/k) we get the following lemma.

LEMMA 39.1 F (n, w) = O(log(w/k)) + F (n, k)

This recursive reduction was first used in van Emde Boas trees [20, 25–27].

Packing Keys

If the word length is small enough—as in today’s computers—the range reduction technique discussed above will decrease the key length to a constant at a low cost. However, in order to make a really convincing comparison between comparison-based algorithms and algorithms based on indirect addressing, we must make the complexity independent of the word size. This can be done by combining range reduction with packed computation. The basic idea behind packed computation is to exploit the bit-parallelism in a computer; many short keys can be packed in a word and treated simultaneously.

The central observation is due to Paul and Simon [21]; they observed that one subtraction can be used to perform comparisons in parallel. Assume that the keys are of length k. We may then pack Θ(w/k) keys in a word in the following way: Each key is represented by a (k + 1)-bit field. The first (leftmost) bit is a test bit and the following bits contain the key, cf. Figure 39.1. Let X and Y be two words containing the same number of packed keys, all test bits in X are 0 and all test bits in Y are 1. Let M be a fixed mask in which all test bits are 1 and all other bits are 0. Let

R ← (Y − X) and M. (39.2)

Then, the ith test bit in R will be 1 if and only if yi > xi. All other test bits, as well as all other bits, in R will be 0.

We use packed comparisons to achieve the following result.

Proof (Sketch) We use a packed B-tree [2].

Th packed B-tree has nodes of degree Θ(w/k). In each node, the search keys are packed together in a single word, in sorted order from left to right. When searching for a k-bit key x in a packed B-tree, we take the following two steps:

1. We construct a word X containing multiple copies of the query key x. X is created by a simple doubling technique: Starting with a word containing x in the rightmost part, we copy the word, shift the copy k + 1 steps and unite the words with a bitwise or. The resulting word is copied, shifted 2k + 2 steps and united, etc. Altogether X is generated in O(log(w/k)) time.

2. After the word X has been constructed, we traverse the tree. At each node, we compute the rank of x in constant time with a packed comparison. The cost of the traversal is proportional to the height of the tree, which is O(log n/ log(w/k)).

A packed comparison at a node is done as in Expression 39.2. The keys in the B-tree node are stored in Y and X contains multiple copies of the query key. After subtraction and masking, the rightmost p test bits in R will be 1 if and only if there are p keys in Y which are greater than x. This is illustrated in Figure 39.1. Hence, by finding the position of the leftmost 1-bit in R we can compute the rank of x among the keys in Y . In order to find the leftmost key, we can simply store all possible values of R in a lookup table. Since the number of possible values equals the number of keys in a B-tree node plus one, a hash table implementation of this lookup table would require only Θ(w/k) space.

Above, we omitted a lot of details, such as how to perform updates and how pointers within a packed B-tree are represented. Details can be found in [2].

Combining

We can now derive our first bounds for searching. First, we state bounds in terms of w. The following bound holds for searching [25–27]:

THEOREM 39.1 F (n, w) = O(log w).

Proof (Sketch) Apply Lemma 39.1 with k = 1.

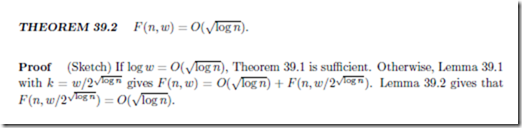

Next, we show how to remove the dependency of word length [2]:

FIGURE 39.2: Searching in the internal trie in a fusion tree node. Horizontal lines represent significant bit positions. The thin paths represent the 6 keys in the trie, while the fat path represents the search key x (which is not present in the trie). The arrow marks the position of the compressed key xl among the keys in the trie.

Comments

Post a Comment