Computational Geometry,Proximity and Location:Introduction and Point Location

Introduction

Proximity and location are fundamental concepts in geometric computation. The term proximity refers informally to the quality of being close to some point or object. Typical problems in this area involve computing geometric structures based on proximity, such as the Voronoi diagram, Delaunay triangulation and related graph structures such as the relative neighborhood graph. Another class of problems are retrieval problems based on proximity. These include nearest neighbor searching and the related concept of range searching. (See Chapter 18 for a discussion of data structures for range searching.) Instances of proximity structures and proximity searching arise in many fields of applications and in many dimensions. These applications include object classification in pattern recognition, document analysis, data compression, and data mining.

The term location refers to the position of a point relative to a geometric subdivision or a given set of disjoint geometric objects. The best known example is the point location problem, in which a subdivision of space into disjoint regions is given, and the problem is to identify which region contains a given query point. This problem is widely used in areas such as computer graphics, geographic information systems, and robotics. Point location is also used as a method for proximity searching, when applied in conjunction with Voronoi diagrams.

In this chapter we will present a number of geometric data structures that arise in the context of proximity and location. The area is so vast that our presentation will be limited to a relatively few relevant results. We will discuss data structures for answering point location queries first. After this we will introduce proximity structures, including Voronoi diagrams and Delaunay triangulations. Our presentation of these topics will be primarily restricted to the plane. Finally, we will present results on multidimensional nearest neighbor searching.

Point Location

The planar point location problem is one of the most fundamental query problems in computational geometry. Consider a planar straight line graph S. (See Chapter 17 for details.) This is an undirected graph, drawn in the plane, whose edges are straight line segments that have pairwise disjoint interiors. The edges of S subdivide the plane into (possibly unbounded) polygonal regions, called faces. Henceforth, such a structure will be referred to as a polygonal subdivision. Throughout, we let n denote the combinatorial complexity of S, that is, the total number of vertices, edges and faces. (We shall occasionally abuse notation and use n to refer to the specific number of vertices, edges, or faces of S.) A planar subdivision is a special case of the more general topological concept of a cell complex [35], in which vertices, edges, and generally faces of various dimensions are joined together so that the intersection of any two faces is either empty or is a face of lower dimension.

The point location problem is to preprocess a polygonal subdivision S in the plane into a data structure so that, given any query point q, the polygonal face of the subdivision containing q can be reported quickly. (In Figure 63.1(a), face A would be reported.) This problem is a natural generalization of the binary search problem in 1-dimensional space, where the faces of the subdivision correspond to the intervals between the 1-dimensional key values. By analogy with the 1-dimensional case, the goal is to preprocess a subdivision into a data structure of size O(n) so that point location queries can be answered in O(log n) time.

A slightly more general formulation of the problem, which is applicable even when the input is not a subdivision is called vertical ray shooting. A set S of line segments is given with pairwise disjoint interiors. Given a query point q, the problem is to determine the line segment of S that lies vertically below q. (In Figure 63.1(b), the segment s would be reported.) If the ray hits no segment, a special value is returned. When S is a polygonal subdivision, point location can be reduced to vertical ray shooting by associating each edge of S with the face that lies immediately above it.

Kirkpatrick’s Algorithm

Kirkpatrick was the first to present a simple point location data structure that is asymptotically optimal [52]. It answers queries in O(log n) time using O(n) space. Although this is not the most practical approach to point location, it is quite easy to understand.

Kirkpatrick starts with the assumption that the planar subdivision has been refined (through the addition of O(n) new edges and vertices) so that it is a triangulation whose external face is a triangle. Let T0 denote this initial triangulation subdivision. Kirkpatrick’s method generates a finite sequence of increasingly coarser triangulations, (T0, T1, T2,... , Tm), where Tm consists of the single triangle forming the outer face of the original triangulation.

This sequence satisfies the following constraints: (a) each triangle of Ti+1 intersects a constant number of triangles of Ti, and (b) the number of vertices of Ti+1 is smaller than the number of vertices of Ti by a constant fraction. (See Figure 63.2.) The data structure itself is a rooted DAG (directed acyclic graph), where the root of the structure corresponds to the single triangle of Tm, and the leaves correspond to the triangles of T0. The interior nodes of the DAG correspond to the triangles of each of the triangulations. A directed edge connects each triangle in Ti+1 with each triangle in Ti that it overlaps.

Given a query point q, the point location query proceeds level-by-level through the DAG, visiting the nodes corresponding to the triangles that contain q. By property (a), each triangle in Ti+1 overlaps a constant number of triangles of Ti, which implies that it is possible to descend one level in the data structure in O(1) time. It follows that the running time is proportional to the number of levels in the tree. By property (b), the number of vertices decreases at each level by a fixed constant fraction, and hence, the number of levels is O(log n). Thus the overall query time is O(log n).

Kirkpatrick showed how to build the data structure by constructing a sequence of triangulations satisfying the above properties. Kirkpatrick’s approach is to compute an independent set of vertices (that is, a set of mutually nonadjacent vertices) in Ti where each vertex of the independent set has constant degree. (An example is shown at the top of Figure 63.2. The vertices of the independent set are highlighted.) The three vertices of the outer face are not included. Kirkpatrick showed that there exists such a set whose size is a constant fraction of the total number of vertices, and it can be computed in linear time. These vertices are removed along with any incident edges, and the resulting “holes” are then retriangulated. Kirkpatrick showed that the two properties hold for the resulting sequence of triangulations.

Slab-Based Methods and Persistent Trees

Many point location methods operate by refining the given subdivision to form one that is better structured, and hence, easier to search. One approach for generating such a refinement is to draw a vertical line through each vertex of the subdivision. These lines partition the plane into a collection of O(n) vertical slabs, such that there is no vertex within each slab. As a result, the intersection of the subdivision with each slab consists of a set of line segments, which cut clear through the slab. These segments thus partition the slab into a collection of disjoint trapezoids with vertical sides. (See Figure 63.3.)

Point location queries can be answered in O(log n) time by applying two binary searches. The first search accesses the query point’s x coordinate to determine the slab containing the query point. The second binary search tests whether the query point lies above or below individual lines of the slab, in order to determine which trapezoid contains the query point. Since each slab can be intersected by at most n lines, this second search can be done in O(log n) time as well.

A straightforward implementation of this method is not space efficient, since there are Ω(n) slabs,∗ each having up to Ω(n) intersecting segments, for a total of Ω(n2) space.

However, adjacent slabs are very similar, since the only segments that change are those that are incident to the vertices lying on the slab boundary. Sarnak and Tarjan [67] exploited this idea to produce an optimal point location data structure. To understand their algorithm, imagine sweeping a line segment continuously from left to right. Consider the sorted order of subdivision line segments intersecting this sweep line. Whenever the sweep line encounters a vertex of the subdivision, the edges incident to this vertex lying to the left of the vertex are removed from the sweep-line order and incident edges to the right of the vertex are inserted. Since every edge is inserted once and deleted once in this process, the total number of changes over the entire sweep process is O(n).

Sarnak and Tarjan proposed maintaining a persistent variant of the search tree. A per- sistent search tree is a dynamic search tree (supporting insertion and deletion) which can answer queries not only to the current tree, but to any of the previous versions in the history of the tree’s lifetime as well.

(See Chapter 31.)

In this context, the history of changes to the search tree is maintained in a left to right sweep of the plane. The persistent search tree supports queries to any of these trees, that is, in any of the slabs, in O(log n) time. The clever aspect of Sarnak and Tarjan’s tree is that it can be stored in O(n) total space (as opposed to O(n2) space, which would result by generating O(n) copies of the tree). This is done by a method called limited node copying. Thus, this provides an asymptoti- cally optimal point location algorithm. A similar approach was discovered independently by Cole [29].

Separating Chains and Fractional Cascading

Slab methods use vertical lines to help organize the search. An alternative approach, first suggested by Lee and Preparata [55], is to use a divide-and-conquer approach based on a hierarchy of monotone polygon chains, called separating chains. A simple polygon is said to be x-monotone if the intersection of the interior of the polygon with a vertical line is connected. An x-monotone subdivision is one in which all the faces are x-monotone. The separating chain method requires that the input be an x-monotone subdivision. Fortunately, it is possible to convert any polygonal subdivision in the plane into an x-monotone subdivision in O(n log n) time, through the addition of O(n) new edges.

(See, for example, [31, 55, 64].)

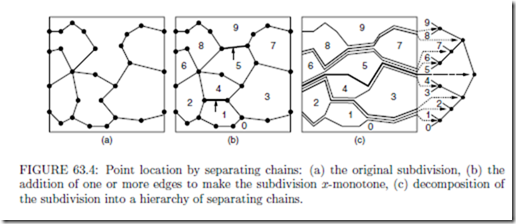

For example, Figure 63.4(a) shows a subdivision that is not x-monotone, but the addition of two edges suffice to produce an x-monotone subdivision shown in Figure 63.4(b).

Consider an x-monotone subdivision with n faces. It is possible to order the faces f0, f1,... , fn−1 such that if i < j, then every vertical line that intersects both of these faces intersects fi below fj . (See Figure 63.4(b).) For each i, 0 < i < n, define the ith separating chain to be the x-monotone polygonal chain separating faces whose indices are less than i from those that are greater than or equal to i.

Observe that, given a chain with m edges, it is possible to determine whether a given query point lies above or below the chain in O(log m) time, by first performing a binary search on the x-coordinates of the chain, in order to find which chain edge overlaps the query point, and then determining whether the query point lies above or below this edge in O(1) time. The separating chain method works intuitively by performing a binary search on these chains. The binary search can be visualized as a binary tree imposed on the chains, as shown in Figure 63.4(c).

Although many chains traverse the same edge, it suffices to store each edge only once in the structure, namely with the chain associated with the highest node in the binary tree. This is because once a discrimination of the query point is made with respect to such an edge, its relation is implicitly known for all other chains that share the same edge. It follows that the total space is O(n).

As mentioned above, at each chain the search takes logarithmic time to determine whether the query point is above or below the chain. Since there are Ω(n) chains, this would lead to an Ω(log2 n) algorithm [55]. There is a clever way to reduce the search time to O(log n), through the use of a simple and powerful method called fractional cascading [24, 36]. Intuitively, fractional cascading seeks to replace a sequence of independent binary searches with a more efficient sequence of coordinated searches. After searching through a parent’s chain, it is known which edge of this chain the query point overlaps. Thus, it is not necessary to search the entire range of x-coordinates for the child’s chain, just the sublist of x-coordinates that overlap this interval.

However, in general, the number of edges of the child’s chain that overlaps this interval may be as large as Ω(n), and so this observation would seem to be of no help. In fractional cascading, this situation is remedied by augmenting each list. Starting with the leaf level, the x-coordinate of every fourth vertex is passed up from each child’s sorted list of x-coordinates and inserted into its parent’s list. This is repeated from the parent to the grandparent, and so on. After doing this, once the edge of the parent’s chain that overlaps the query point has been determined, there can be at most four edges of the child’s chain that overlap this interval. (For example, in Figure 63.5 the edge pq is overlapped by eight edges at the next lower level. After cascading, it is broken into three subedges, each of which overlaps at most four edges at the next level.) Thus, the overlapping edge in the child’s chain can be found in O(1) time. The root requires O(log n) time, and each of the subsequent O(log n) searches can be performed in O(1) additional time. It can be shown that this augmentation of the lists increases the total size of all the lists by at most a constant factor, and hence the total space is still O(n).

Trapezoidal Maps and the History Graph

Next we describe a randomized approach for point location. It is quite simple and practical. Let us assume that the planar subdivision is presented simply as a set of n line segments S = {s1, s2,..., sn} with pairwise disjoint interiors. The algorithm answers vertical ray-shooting queries as described earlier. This approach was developed by Mulmuley [60]. Also see Seidel [68].

The algorithm is based on a structure called a trapezoidal map (or trapezoidal decompo- sition). First, assume that the entire domain of interest is enclosed in a large rectangle. Imagine shooting a bullet vertically upwards and downwards from each vertex in the polyg- onal subdivision until it hits another segment of S. To simplify the presentation, we shall assume that the x-coordinates of no two vertices are identical. The segments of S together with the resulting bullet paths subdivide the plane into O(n) trapezoidal cells with vertical sides, which may degenerate to triangles. (See Figure 63.6(a).)

For the purposes of point location, the trapezoidal map is created by a process called a randomized incremental construction. The process starts with the initial bounding rectangle (that is, one trapezoid) and then the segments of S are inserted one by one in random order. As each segment is added, the trapezoidal map is updated by “walking” the segment through the subdivision, and updating the map by shooting new bullet paths through the segments endpoints and trimming existing paths that hit the new segment. See [31, 60, 68] for further details. The number of changes in the diagram with each insertion is proportional to the number of vertical segments crossed by the newly added segment, which in the worst case may be as high as Ω(n). It can be shown, however, that on average each insertion of a new segment results in O(1) changes. This is true irrespective of the distribution of the segments, and the expectation is taken over all possible insertion orders.

The point location data structure is based on a rooted directed acyclic graph, or DAG, called the history DAG. Each node has either two outgoing edges (internal nodes) or none (leaves). Leaves correspond one-to-one with the cells of the trapezoidal map. There are two types of internal nodes, x-nodes and y-nodes. Each x-node contains the x-coordinate x0 of an endpoint of one of the segments, and its two children correspond to the points lying to the left and to the right of the vertical line x = x0. Each y-node contains a pointer to a line segment of the subdivision. The left and right children correspond to whether the query point is above or below the line containing this segment, respectively. (In Figure 63.7, x-nodes are shown as circles, y-nodes as hexagons, and leaves as squares.)

As with Kirkpatrick’s algorithm, the construction of the point location data structure encodes the history of the randomized incremental construction. Let (T0, T1,..., Tn) denote the sequence of trapezoidal maps that result through the randomized incremental process. The point location structure after insertion of the ith segment has one leaf for each trapezoid in Ti. Whenever a segment is inserted, the leaf nodes corresponding to trapezoids that were destroyed are replaced with internal x- and y-nodes that direct the search to the location of the query point in the newly created trapezoids, after the insertion. (This is illustrated in Figure 63.7.) Through the use of node sharing, the resulting data structure can be shown to have expected size O(n), and its expected depth is O(log n), where the expectation is over all insertion orders. Details can be found in [31, 60, 68].

Worst- and Expected-Case Optimal Point Location

Goodrich, Orletsky and Ramaiyer [43] posed the question of bounding the minimum number of comparisons required, in the worst case, to answer point location queries in a subdivision of n segments. Adamy and Seidel [1] provided a definitive answer by showing that point location queries can be answered with log2 n + 2;log2 n + o(√log n) primitive comparisons.

They also gave a similar lower bound.

Another natural question involves the expected-case complexity of point location. Given a polygonal subdivision S, assume that each cell z ∈ S is associated with the probability pz that a query point lies in z. The problem is to produce a point location data structure whose expected search time is as low as possible. The appropriate target bound on the number of comparisons is given by the entropy of the subdivision, which is denoted by H and defined:

In the 1-dimensional case, a classical result due to Shannon implies that the expected number of comparisons needed to answer such queries is at least as large as the entropy of the probability distribution [53, 71]. Mehlhorn [58] showed that in the 1-dimensional case it is possible to build a binary search tree whose expected search time is at most H + 2.

Arya, Malamatos, and Mount [5, 6] presented a number of results on this problem in the planar case, and among them they showed that for a polygonal subdivision of size n in which each cell has constant combinatorial complexity, it is possible to answer point location queries with H + o(H) comparisons in the expected case using space that is nearly linear in n. Their results also applied to subdivisions with convex cells, assuming the query distribution is uniform within each cell. Their approach was loosely based on computing a binary space partition (BSP) tree (see Chapter 20) satisfying two properties:

(a) The entropy of the subdivision defined by the leaves of the BSP should be close to the entropy of the original subdivision.

(b) The depth of a leaf should be close to log2(1/p), where p is the probability that a query point lies within the leaf.

Arya, Malamatos, and Mount [7] also presented a simple weighted variant of the randomized incremental algorithm and showed that it can answer queries in O(H) expected time and O(n) space. Iacono [48] presented a deterministic weighted variant based on Kirkpatrick’s algorithm.

Comments

Post a Comment