Elimination Structures in Scientific Computing:Applications of Etrees

Applications of Etrees

Efficient Symbolic Factorization

Symbolic factorization (or symbolic elimination) is a process that computes the nonzero structure of the factors of a matrix without computing the numerical values of the nonzeros. The symbolic Cholesky factor of a matrix has several uses. It is used to allocate the data structure for the numeric factor and annotate it with all the row/column indices, which enables the removal of most of the non-numeric operations from the inner-most loop of the subsequent numeric factorization [20, 29]. It is also used to compute relaxed supernode (or amalgamated node) partitions, which group columns into supernodes even if they only have approximately the same structure [4, 21]. Symbolic factors can also be used in algorithms that construct approximate Cholesky factors by dropping nonzeros from a matrix A and factoring the resulting, sparser matrix B [6, 72]. In such algorithms, elements of A that are dropped from B but which appear in the symbolic factor of B can can be added to the matrix B; this improves the approximation without increasing the cost of factoring B. In all of these applications a supernodal symbolic factor (but not a relaxed one) is sufficient; there is no reason to explicitly represent columns that are known to be identical.

The following algorithm for symbolically factoring a symmetric matrix A is due to George and Liu [28] (and in a more graph-oriented form due to [64]; see also [29, Section 5.4.3] and [54, Section 8]).

The algorithm uses the elimination tree implicitly, but does not require it as input; the algorithm can actually compute the elimination tree on the fly. The algorithm uses the observation that

That is, the structure of a column of L is the union of the structure of its children in the elimination tree and the structure of the same column in the lower triangular part of A. Identifying the children can be done using a given elimination tree, or the elimination tree can be constructed on the fly by adding column i to the list of children of pi when the structure of i is computed (pi is the row index of the first subdiagonal nonzero in column i of L). The union of a set of column structures is computed using a boolean array P of size n (whose elements are all initialized to false), and an integer stack to hold the newly created structure. A row index k from a child column or from the column of A is added to the stack only if P[k] = false. When row index k is added to the stack, P[k] is set to true to signal that k is already in the stack. When the computation of hadj+(j) is completed, the stack is used to clear P so that it is ready for the next union operation. The total work in the algorithm is Θ(|L|), since each nonzero requires constant work to create and constant work to merge into the parent column, if there is a parent. (Here |L| denotes the number of nonzeros in L, or equivalently the number of edges in the filled graph G+ (A); similarly |A| denotes the number of nonzeros in A, or the number of edges in the initial graph G(A).)

The symbolic structure of the factor can usually be represented more compactly and computed more quickly by exploiting supernodes, since we essentially only need to represent the identity of each supernode (the constituent columns) and the structure of the first (lowest numbered) column in each supernode. The structure of any column can be computed from this information in time proportional to the size of the column. The George-Liu columnmerge algorithm presented above can compute a supernodal symbolic factorization if it is given as input a supernodal elimination tree; such a tree can be computed in O(|A|) time by the Liu-Ng-Peyton algorithm [56]. In practice, this approach saves a significant amount of work and storage.

Clearly, column-oriented symbolic factorization algorithms can also generate the structure of rows in the same asymptotic work and storage. But a direct symbolic factorization by rows is less obvious. Whitten [73], in an unpublished manuscript cited by Tarjan and Yannakakis [71], proposed a row-oriented symbolic factorization algorithm (see also [51] and [54, Sections 3.2 and 8.2]). The algorithm uses the characterization of the structure of row i in L as the row subtree Tr (i). Given the elimination tree and the structure of A by rows, it is trivial to traverse the ith row subtree in time proportional to the number of nonzeros in row i of L. Hence, the elimination tree along with a row-oriented representation of A is an effective implicit symbolic row-oriented representation of L; an explicit representation is usually not needed, but it can be generated in work and space O(|L|) from this implicit representation.

Predicting Row and Column Nonzero Counts

In some applications the explicit structure of columns of L is not required, only the number of nonzeros in each column or each row. Gilbert, Ng, and Peyton [38] describe an almost- linear-time algorithm for determining the number of nonzeros in each row and column of L. Applications for computing these counts fast include comparisons of fill in alternative matrix orderings, preallocation of storage for a symbolic factorization, finding relaxed supernode partitions quickly, determining the load balance in parallel factorizations, and determining synchronization events in parallel factorizations.

The algorithm to compute row counts is based on Whitten’s characterization [73]. We are trying to compute |Li∗| = |Tr (i)|. The column indices j < i in row i of A define a subset of the vertices in the subtree of the elimination tree rooted at the vertex i, T [i]. The difficulty, of course, is counting the vertices in Tr (i) without enumerating them. The Gilbert-Ng-Peyton algorithm counts these vertices using three relatively simple mechanisms:

(1) processing the column indices j < i in row i of A in postorder of the etree, (2) computing the distance of each vertex in the etree from the root, and (3) setting up a data structure to compute the least-common ancestor (LCA) of pairs of etree vertices. It is not hard to show that the once these preprocessing steps are completed, |Tr (i)| can be computed using |Ai∗| LCA computations. The total cost of the preprocessing and the LCA computations is almost linear in |A|.

Gilbert, Ng, and Peyton show how to further reduce the number of LCA computations.

They exploit the fact that the leaves of Tr (i) are exactly the indices j that cause the creation of new supernodes in the Liu-Ng-Peyton supernode-finding algorithm [56]. This observation limits the LCA computations to leaves of row subtrees, i.e., edges in the skeleton graph G− (A). This significantly reduces the running time in practice.

Efficiently computing the column counts in L is more difficult. The Gilbert-Ng-Peyton algorithm assigns a weight w(j) to each etree vertex j, such that |L∗j | = ),k∈T [j] w(k).

Therefore, the column-count of a vertex is the sum of the column counts of its children, plus its own weight. Hence, wj must compensate for (1) the diagonal elements of the children, which are not included in the column count for j, (2) for rows that are nonzero in column j but not in its children, and (3) for duplicate counting stemming from rows that appear in more than one child. The main difficulty lies in accounting for duplicates, which is done using least-common-ancestor computations, as in the row-counts algorithm. This algorithm, too, benefits from handling only skeleton-graph edges.

Gilbert, Ng, and Peyton [38] also show in their paper how to optimize these algorithms, so that a single pass over the nonzero structure of A suffices to compute the row counts, the column counts, and the fundamental supernodes.

Three Classes of Factorization Algorithms

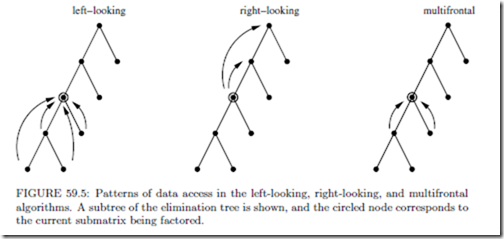

There are three classes of algorithms used to implement sparse direct solvers: left-looking, right-looking, and multifrontal; all of them use the elimination tree to guide the computation of the factors. The major difference between the first two of these algorithms is in how they schedule the computations they perform; the multifrontal algorithm organizes computations differently from the other two, and we explain this after introducing some concepts.

The computations on the sparse matrix are decomposed into subtasks involving computations among dense submatrices (supernodes), and the precedence relations among them are captured by the supernodal elimination tree. The computation at each node of the elimination tree (subtask) involves the partial factorization of the dense submatrix associated with it.

The right-looking algorithm is an eager updating scheme: Updates generated by the submatrix of the current subtask are applied immediately to future subtasks that it is linked to by edges in the filled graph of the sparse matrix. The left-looking algorithm is a lazy updating scheme: Updates generated by previous subtasks linked to the current subtask by edges in the filled adjacency graph of the sparse matrix are applied just prior to the factorization of the current submatrix. In both cases, updates always join a subtask to some ancestor subtask in the elimination tree. In the multifrontal scheme, updates always go from a child task to its parent in the elimination tree; an update that needs to be applied to some ancestor subtask is passed incrementally through a succession of vertices on the elimination tree path from the subtask to the ancestor.

Thus the major difference among these three algorithms is how the data accesses and the computations are organized and scheduled, while satisfying the precedence relations captured by the elimination tree. An illustration of these is shown in Fig. 59.5.

Scheduling Parallel Factorizations

In a parallel factorization algorithm, dependences between nonzeros in L determine the set of admissible schedules. A diagonal nonzero can only be factored after all updates to it from previous columns have been applied, a subdiagonal nonzero can be scaled only after updates to it have been applied (and after the diagonal element has been factored), and two subdiagonal nonzeros can update elements in the reduced system only after they have been scaled.

The elimination tree represents very compactly and conveniently a superset of these dependences. More specifically, the etree represents dependences between columns of L. A

column can be completely factored only after all its descendants have been factored, and two columns that are not in an ancestor-descendant relationship can be factored in any order. Note that this is a superset of the element dependences, since a partially factored column can already perform some of the updates to its ancestors. But most sparse elimination algorithms treat column operations (or row operations) as atomic operations that are always performed by a single processor sequentially and with no interruption. For such algorithms, the etree represents exactly the relevant dependences.

In essence, parallel column-oriented factorizations can factor the columns associated with different children of an etree vertex simultaneously, but columns in an ancestor-descendant relationship must be processed in postorder. Different algorithms differ mainly in how updates are represented and scheduled.

By computing the number of nonzeros in each column, a parallel factorization algorithm can determine the amount of computation and storage associated with each subtree in the elimination tree. This information can be used to assign tasks to processors in a load- balanced way.

Duff was the first to observe that the column dependences represented by the elimination tree can guide a parallel factorization [18]. In that paper Duff proposed a parallel multi- frontal factorization. The paper also proposed a way to deal with indefinite and asymmetric systems, similar to Duff and Reid’s sequential multifrontal approach [21]. For further references up to about 1997, see Heath’s survey [43]. Several implementations described in papers published after 1997 include PaStiX [45], PARADISO [68], WSSMP [42], and MUMPS [3], which also includes indefinite and unsymmetric factorizations. All four are message-passing codes.

Scheduling Out-of-Core Factorizations

In an out-of-core factorization at least some of the data structures are stored on external- memory devices (today almost exclusively magnetic disks). This allows such factorization algorithms to factor matrices that are too large to factor in main memory. The factor, which is usually the largest data structure in the factorization, is the most obvious candidate for storing on disks, but other data structures, for example the stack of update matrices in a multifrontal factorization, may also be stored on disks.

Planning and optimizing out-of-core factorization schedules require information about data dependences in the factorization and about the number of nonzeros in each column of the factor. The etree describes the required dependence information, and as explained above, it is also used to compute nonzero counts.

Following Rothberg and Schreiber [66], we classify out-of-core algorithms into robust algorithms and non-robust algorithms. Robust algorithms adapt the factorization to complete with the core memory available by performing the data movement and computations at a smaller granularity when necessary. They partition the submatrices corresponding to the supernodes and stacks used in the factorization into smaller units called panels to ensure that the factorization completes with the available memory. Non-robust algorithms assume that the stack or a submatrix corresponding to a supernode fits within the core memory provided. In general, non-robust algorithms read elements of the input matrix only once, read from disk nothing else, and they only write the factor elements to disk; Dobrian and Pothen refer to such algorithms as read-once-write-once, and to robust ones as read-many- write-many [15].

Liu proposed [53] a non-robust method that works as long as for all j = 1,... , n, all the nonzeros in the submatrix Lj:n,1:j of the factor fit simultaneously in main memory. Liu also shows in that paper how to reduce the amount of main memory required to factor a given matrix using this technique by reordering the children of vertices in the etree.

Rothberg and Schreiber [65, 66] proposed a number of robust out-of-core factorization algorithms. They proposed multifrontal, left-looking, and hybrid multifrontal/left-looking methods. Rotkin and Toledo [67] proposed two additional robust methods, a more efficient left-looking method, and a hybrid right/left-looking method. All of these methods use the etree together with column-nonzero counts to organize the out-of-core factorization process.

Dobrian and Pothen [15] analyzed the amount of main memory required for read-once- write-once factorizations of matrices with several regular etree structures, and the amount of I/O that read-many-write-many factorizations perform on these matrices. They also provided simulations on problems with irregular elimination tree structures. These studies led them to conclude that an external memory sparse solver library needs to provide at least two of the factorization methods, since each method can out-perform the others on problems with different characteristics. They have provided implementations of out-of- core algorithms for all three of the multifrontal, left-looking, and right-looking factorization methods; these algorithms are included in the direct solver library OBLIO [16].

In addition to out-of-core techniques, there exist techniques that reduce the amount of main memory required to factor a matrix without using disks. Liu [52] showed how to minimize the size of the stack of update matrices in the multifrontal method by reordering the children of vertices in the etree; this method is closely related to [53]. Another approach, first proposed by Eisenstat, Schultz and Sherman [23] uses a block factorization of the coefficient matrix, but drops some of the off-diagonal blocks. Dropping these blocks reduces the amount of main memory required for storing the partial factor, but requires recomputation of these blocks when linear systems are solved using the partial factor. George and Liu [29, Chapter 6] proposed a general algorithm to partition matrices into blocks for this technique. Their algorithm uses quotient graphs, data structures that we describe later in this chapter.

Comments

Post a Comment