Elimination Structures in Scientific Computing:Column Elimination Trees and Elimination DAGS

Column Elimination Trees and Elimination DAGS

Elimination structures for asymmetric Gaussian elimination are somewhat more complex than the equivalent structures for symmetric elimination. The additional complexity arises because of two issues. First, the factorization of a sparse asymmetric matrix A, where A is factored into a lower triangular factor L and an upper triangular factor U , A = LU , is less structured than the sparse symmetric factorization process. In particular, the relationship between the nonzero structure of A and the nonzero structure of the factors is much more complex. Consequently, data structures for predicting fill and representing data-flow and control-flow dependences in elimination algorithms are more complex and more diverse.

Second, factoring an asymmetric matrix often requires pivoting, row and/or column ex- changes, to ensure existence of the factors and numerical stability. For example, the 2-by-2 matrix A = [0 1; 1 0] does not have an LU factorization, because there is no way to eliminate the first variable from the first equation: that variable does not appear in the equation at all. But the permuted matrix PA does have a factorization, if P is a permutation matrix that exchanges the two rows of A. In finite precision arithmetic, row and/or column exchanges are necessary even when a nonzero but small diagonal element is encountered. Some sparse LU algorithms perform either row or column exchanges, but not both. The two cases are essentially equivalent (we can view one as a factorization of AT ), so we focus on row exchanges (partial pivoting). Other algorithms, primarily multifrontal algorithms, perform both row and column exchanges; these are discussed toward the end of this section. For completeness, we note that pivoting is also required in the factorization of sparse symmetric indefinite matrices. Such matrices are usually factored into a product LDLT , where L is lower triangular and D is a block diagonal matrix with 1-by-1 and 2-by-2 blocks. There has not been much research about specialized elimination structures for these factorization algorithms; such codes invariably use the symmetric elimination tree of A to represent dependences for structure prediction and for scheduling the factorization.

The complexity and diversity of asymmetric elimination arises not only due to pivoting, but also because asymmetric factorizations are less structured than symmetric ones, so a rooted tree can no longer represent the factors. Instead, directed acyclic graphs (dags) are used to represent the factors and dependences in the elimination process. We discuss elimination dags (edags) in Section 59.5.2.

Surprisingly, dealing with partial pivoting turns out to be simpler than dealing with the asymmetry, so we focus next on the column elimination tree, an elimination structure for LU factorization with partial pivoting.

The Column Elimination Tree

The column elimination tree (col-etree) is the elimination tree of AT A, under the assumption that no numerical cancellation occurs in the formation of AT A. The significance of this tree to LU with partial pivoting stems from a series of results that relate the structure of the LU factors of P A, where P is some permutation matrix, to the structure of the Cholesky factor of AT A.

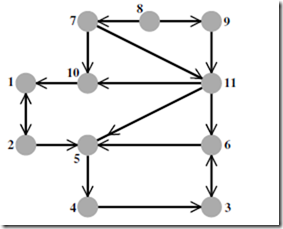

FIGURE 59.8: The directed graph G(A) of an asymmetric matrix A. The column intersection graph of this graph G∩(B) is exactly the graph shown in Figure 59.1, so the column elimination tree of A is the elimination tree shown in Figure 59.2. sparser than the column intersection graph.

George and Ng observed that, for any permutation matrix P , the structure of the LU factors of PA is contained in the structure of the Cholesky factor of AT A, as long as A does not have any zeros on its main diagonal [31]. (If there are zeros on the diagonal of a nonsingular A, the rows can always be permuted first to achieve a zero-free diagonal.) Figure 59.8 illustrates this phenomenon. Gilbert [32] strengthened this result by showing that for every nonzero Rij in the Cholesky factor R of AT A = RT R, where A has a zero-free diagonal and no nontrivial block triangular form, there exists a matrix A(Uij ) with the same nonzero structure as A, such that in the LU factorization of A(Uij ) = P T L(Uij )U (Uij ) with partial pivoting, U (Uij ) j= 0. This kind of result is known as a one-at-a-time result, since it guarantees that every element of the predicted factor can fill for some choice of numerical values in A, but not that all the elements can fill simultaneously. Gilbert and Ng [37] later generalized this result to show that an equivalent one-at-a-time property is true for the lower-triangular factor.

These results suggest that the col-etree, which is the elimination tree of AT A, can be used for scheduling and structure prediction in LU factorizations with partial pivoting. Because the characterization of the nonzero structure of the LU factors in terms of the structure of the Cholesky factor of AT A relies on one-at-a-time results, the predicted structure and predicted dependences are necessarily only loose upper bounds, but they are nonetheless useful.

The col-etree is indeed useful in LU with partial pivoting. If Uij j= 0, then by the results cited above Rij j= 0 (recall that R is the Cholesky factor of the matrix AT A). This, in turn, implies that j is an ancestor of i in the col-etree. Since column i of L updates column j of L and U only if Uij j= 0, the col-etree can be used as a task-dependence graph in columnoriented LU factorization codes with partial pivoting. This analysis is due to Gilbert, who used it to schedule a parallel factorization code [32]. The same technique is used in several current factorization codes, including SuperLU [12], SuperLU MT [13], UMFPACK 4 [9], and TAUCS [5, 40]. Gilbert and Grigori [33] recently showed that this characterization is tight in a strong all-at-once sense: for every strong Hall matrix A (i.e., A has no nontrivial block-triangular form), there exists a permutation matrix P such that every edge of the col-etree corresponds to a nonzero in the upper-triangular factor of P A. This implies that the a-priori symbolic column-dependence structure predicted by the col-etree is as tight as possible.

Like the etree of a symmetric matrix, the col-etree can be computed in time almost linear in the number of nonzeros in A [34]. This is done by an adaptation of the symmetric etree algorithm, an adaptation that does not compute explicitly the structure of AT A. Instead of constructing G(AT A), the algorithm constructs a much sparser graph Gt with the same elimination tree. The main idea is that each row of A contributes a clique to G(AT A); this means that each nonzero index in the row must be an ancestor of the preceding nonzero index. A graph in which this row-clique is replaced by a path has the same elimination tree, and it has only as many edges as there are nonzeros in A. The same paper shows not only how to compute the col-etree in almost linear time, but also how to bound the number of nonzeros in each row and column of the factors L and U , using again an extension of the symmetric algorithm to compute the number of nonzeros in the Cholesky factor of AT A. The decomposition of this Cholesky factor into fundamental supernodes, which the algorithm also computes, can be used to bound the extent of fundamental supernodes that will arise in L.

The col-etree represents all possible column dependences for any sequence of pivot rows. For a specific sequence of pivots, the col-etree includes dependences that do not occur during the factorization with these pivots. There are two typical situations in which the pivoting sequence is known. The first is when the matrix is known to have a stable LU factorization without pivoting. The most common case is when AT is strictly diagonally dominant. Even if A is not diagonally dominant, its rows can be pre-permuted to bring large elements to the diagonal. The permuted matrix, even if its transpose is not diagonally dominant, is fairly likely to have a relatively stable LU factorization that can be used to accurately solve linear systems of equations. This strategy is known as static pivoting [49]. The other situation in which the pivoting sequence is known is when the matrix, or part of it, has already been factored. Since virtually all sparse factorization algorithms need to collect information from the already-factored portion of the matrix before they factor the next row and column, a compact representation of the structure of this portion is useful.

Elimination dags (edags) are directed acyclic graphs that capture a minimal or near minimal set of dependences in the factors. Several edags have been proposed in the literature. There are two reasons for this diversity. First, edags are not always as sparse and easy to compute as elimination trees, so researchers have tried to find edags that are easy to compute, even if they represent a superset of the actual dependences. Second, edags often contain information only about a specific structure in the factors or a specific dependence in a specific elimination algorithm (e.g., data dependence in a multifrontal algorithm), so different edags are used for different applications. In other words, edags are not as universal as etrees in their applications.

The simplest edag is the graph G(LT ) of the transpose of the lower triangular factor, if we view every edge in this graph as directed from the lower-numbered vertex to a higher numbered vertex. This corresponds to orienting edges from a row index to a column index in L. For example, if L6,3 j= 0, we view the edge (6, 3) as a directed edge 3 → 6 in G(LT ). Let us denote by G((L(j−1) )T ) the partial lower triangular factor after j − 1 columns have been factored. Gilbert and Peierls showed that the nonzeros in the jth rows of L and U are exactly the vertices reachable, in G((L(j−1) )T ), from the nonzero indices in the jth column

FIGURE 59.9: The directed graph of the U factor of the matrix whose graph is shown in Figure 59.8. In this particular case, the graph of LT is exactly the same graph, only with the direction of the edges reversed. Fill is indicated by dashed lines. Note that the fill is indeed bounded by the fill in the column-intersection graph, which is shown in Figure 59.1. However, that upper bound is not realized in this case: the edge (9, 10) fills in the column- intersection graph, but not in the LU factors.

of A [39]. This observation allowed them to use a depth-first search (DFS) to quickly find the columns in the already-factored part of the matrix that update the jth column before it can be factored. This resulted in the first algorithm that factored a general sparse matrix in time linear in the number of arithmetic operations (earlier algorithms sometimes performed much more work to manipulate the sparse data structure than the amount of work in the actual numerical computations).

Eisenstat and Liu showed that a simple modification of the graph G((L(j−1) )T ) can often eliminate many of its edges without reducing its ability to predict fill [22]. They showed that if both Lik and Uki are nonzeros, then all the edges i → R for R > i can be safely pruned from the graph. In other words, the nonzeros in column k of L below row i can be pruned. This is true since if Uki j= 0, then column k of L updates column i, so all the pruned nonzeros appear in column i, and since the edge k → i is in the graph, they are all reachable when k is reachable. This technique is called symmetric pruning. This edag is used in the SuperLU codes [12, 13] to find the columns that update the next supernode (set of consecutive columns with the same nonzero structure in L). Note that the same codes use the col-etree to predict structure before the factorization begins, and an edag to compactly represent the structure of the already-factored block of A.

Gilbert and Liu went a step further and studied the minimal edags that preserve the reachability property that is required to predict fill [35]. These graphs, which they called the elimination dags are the transitive reductions of the directed graphs G(LT ) and G(U ). (The graph of U can be used to predict the row structures in the factors, just as G(LT ) can predict the column structures.) Since these graphs are acyclic, each graph has a unique transitive reduction; If A is symmetric, the transitive reduction is the symmetric elimination tree. Gilbert and Liu also proposed an algorithm to compute these transitive reductions row by row. Their algorithm computes the next row i of the transitive reduction of L by

traversing the reduction of U to compute the structure of row i of L, and then reducing it. Then the algorithm computes the structure of row i of U by combining the structures of earlier rows whose indices are the nonzeros in row i of L. In general, these minimal edags are often more expensive to compute than the symmetrically-pruned edags, due to the cost of transitively reducing each row. Gupta recently proposed a different algorithm for computing the minimal edags [41]. His algorithm computes the minimal structure of U by rows and of L by columns. His algorithm essentially applies to both L and U the rule that Gilbert and Liu apply to U . By computing the structure of U by rows and of L by columns, Gupta’s algorithm can cheaply detect supernodes that are suitable for asymmetric multifrontal algorithms, where a supernode consists of a set of consecutive indices for which both the rows of U all have the same structure and the columns of L have the same structure (but the rows and columns may have different structures).

Elimination Structures for the Asymmetric Multifrontal Algo- rithm

Asymmetric multifrontal LU factorization algorithms usually use both row and column exchanges. UMFPACK, the first such algorithm, due to Davis and Duff [10], used a pivoting strategy that factored an arbitrary row and column permutation of A. The algorithm tried to balance numerical and degree considerations in selecting the next row and column to be factored, but in principle, all row and column permutations were possible. Under such conditions, not much structure prediction is possible. The algorithm still used a clever elimination structure that we described earlier, a biclique cover, to represent the structure of the Schur complement (the remaining uneliminated equations and variables), but it did not use etrees or edags.

Recent unsymmetric multifrontal algorithms still use pivoting strategies that allow both row and column exchanges, but the pivoting strategies are restricted enough that struc- ture prediction is possible. These pivoting strategies are based on delayed pivoting, which was originally invented for symmetric indefinite factorizations. One such code, Davis’s UMFPACK 4, uses the column elimination tree to represent control-flow dependences, and a biclique cover to represent data dependences [9]. Another code, Gupta’s WSMP, uses conventional minimal edags to represent control-flow dependences, and specialized dags to represent data dependences [41]. More specifically, Gupta shows how to modify the minimal edags so they exactly represent data dependences in the unsymmetric multifrontal algorithm with no pivoting, and how to modify the edags to represent dependences in an unsymmetric multifrontal algorithm that employs delayed pivoting.

Comments

Post a Comment